RunPod vs Google Colab: Features, Costs, and GPU Performance Compared

Cloud GPUs have revolutionized how we train and deploy machine learning models. They bring scalability, flexibility, and performance that local hardware often can’t match. But with so many providers out there, picking the right one feels like a gamble.

Two names that often pop up in the discussion are RunPod and Google Colab. Both offer access to powerful GPUs, but they go about it in very different ways. Whether you’re chasing lightning-fast deployment or just want a free, accessible setup, these platforms have something to offer.

In this post, we’ll compare RunPod and Google Colab side by side. From GPU models to pricing and usability, we’re diving into the details so you can choose the right tool for your AI workflow. No fluff, just facts—let’s get into it.


RunPod vs Google Colab: Overview

| Feature | RunPod | Google Colab |
|---|---|---|
| GPU Models | Wide range of GPUs, from entry-level (RTX 3070) to high-performance (NVIDIA H100). | Varies by plan; includes the free-tier Tesla T4 and enterprise-grade A100/V100. |
| Pricing | Hourly rates start at $0.13; high-end GPUs up to $3.49/hour. | Free tier available; paid plans range from $0.42/hour to $4.71/hour for enterprise GPUs. |
| Ease of Use | Advanced features (e.g., custom containers, CLI tools); suited to experienced developers. | Beginner-friendly with pre-installed libraries; no setup required. |
| Collaboration | Secure, but no real-time editing; teams rely on external tools such as Git. | Real-time collaboration with Google Drive integration; GitHub support for version control. |
| Deployment | Nearly instant GPU deployment; supports custom containers and serverless autoscaling. | Simple browser-based Jupyter notebook interface; limited deployment flexibility, tied to Google’s infrastructure. |
| Scalability | Serverless autoscaling for dynamic workloads. | No autoscaling; limited runtime on free and paid plans. |
| Storage | Flexible persistent and temporary storage; 100TB+ of network storage. | Integrated with Google Drive; scalable options with Google Cloud Storage (extra cost). |
| Use Case | Ideal for professionals needing tailored environments and high performance. | Best for beginners, students, and lightweight machine learning or data analysis projects. |

RunPod vs Google Colab: GPU Models and Pricing

RunPod

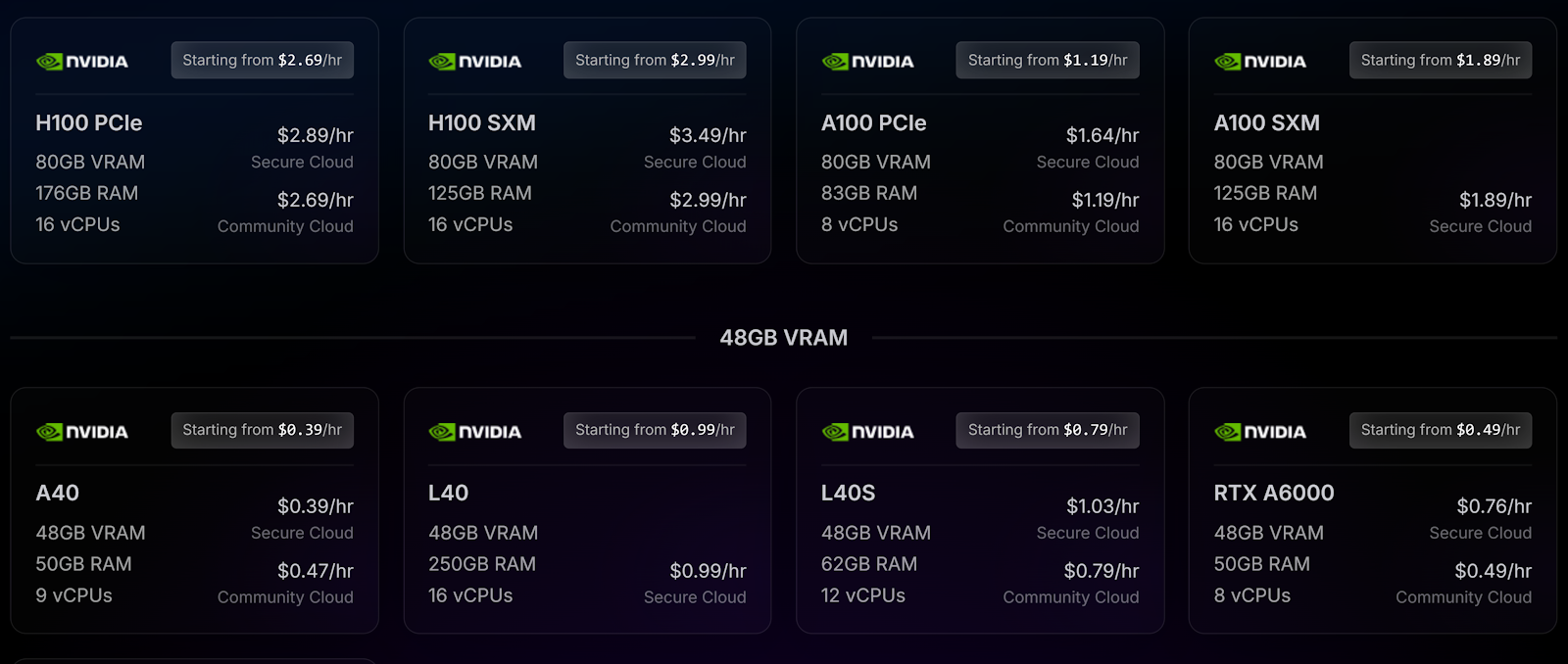

RunPod offers a diverse range of GPUs for both casual users and professionals. Its flexible pricing, starting at $0.13/hour, makes it accessible to machine learning practitioners at every level. Here’s a breakdown of the GPUs available on RunPod:

1. High-Performance GPUs

- NVIDIA H100 PCIe (80GB VRAM)

- Specs: 188GB RAM, 16 vCPUs.

- Price: $2.69/hour (Secure Cloud).

- Use Case: Ideal for large-scale deep learning tasks, generative AI, and large language model training.

- Benefits: Exceptional memory capacity and processing power for tackling massive datasets and models.

- NVIDIA H100 SXM (80GB VRAM)

- Specs: 125GB RAM, 16 vCPUs.

- Price: $2.99/hour (Community Cloud).

- Use Case: High-performance computing with an emphasis on energy efficiency and speed.

- Benefits: Optimized for advanced AI workloads requiring rapid inference and training speeds.

2. Mid-Range GPUs

- NVIDIA A100 PCIe (80GB VRAM)

- Specs: 83GB RAM, 8 vCPUs.

- Price: $1.19/hour (Community Cloud).

- Use Case: Training deep neural networks and AI model prototyping.

- Benefits: Cost-efficient and scalable for projects with medium computational needs.

- NVIDIA RTX A6000 (48GB VRAM)

- Specs: 50GB RAM, 8 vCPUs.

- Price: $0.76/hour (Secure Cloud).

- Use Case: Graphics rendering, video editing, and smaller AI models.

- Benefits: Balances cost and performance for versatile applications.

3. Entry-Level GPUs

- NVIDIA RTX 3090 (24GB VRAM)

- Specs: 24GB RAM, 8 vCPUs.

- Price: $0.22/hour (Community Cloud).

- Use Case: Entry-level deep learning and data science projects.

- Benefits: Affordable and sufficient for smaller-scale machine learning models.

- NVIDIA RTX 3070 (8GB VRAM)

- Specs: 14GB RAM, 4 vCPUs.

- Price: $0.13/hour (Community Cloud).

- Use Case: Lightweight machine learning tasks and exploratory data analysis.

- Benefits: Extremely budget-friendly and accessible to beginners.
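A practical way to use this list is to start from the VRAM your model needs and pick the cheapest GPU that fits. The sketch below does that with the rates quoted above. It is a minimal illustration, assuming those rates are still current (RunPod prices change) and ignoring the Secure Cloud vs Community Cloud distinction; the `GpuOffer` type and `cheapest_gpu` helper are hypothetical, not part of any RunPod SDK.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class GpuOffer:
    name: str
    vram_gb: int
    usd_per_hour: float


# Snapshot of the hourly rates listed above; check RunPod's pricing page for current numbers.
RUNPOD_OFFERS = [
    GpuOffer("NVIDIA H100 PCIe", 80, 2.69),
    GpuOffer("NVIDIA H100 SXM", 80, 2.99),
    GpuOffer("NVIDIA A100 PCIe", 80, 1.19),
    GpuOffer("NVIDIA RTX A6000", 48, 0.76),
    GpuOffer("NVIDIA RTX 3090", 24, 0.22),
    GpuOffer("NVIDIA RTX 3070", 8, 0.13),
]


def cheapest_gpu(min_vram_gb: int, offers: Sequence[GpuOffer] = RUNPOD_OFFERS) -> Optional[GpuOffer]:
    """Return the lowest-priced offer with at least min_vram_gb of VRAM, or None if nothing fits."""
    eligible = [o for o in offers if o.vram_gb >= min_vram_gb]
    return min(eligible, key=lambda o: o.usd_per_hour, default=None)


print(cheapest_gpu(40))  # a 40GB requirement selects the RTX A6000 at $0.76/hour
```

Filtering on VRAM first, then minimizing price, reflects the usual constraint: a model that does not fit in memory will not run at any price.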

Google Colab GPU Models and Pricing

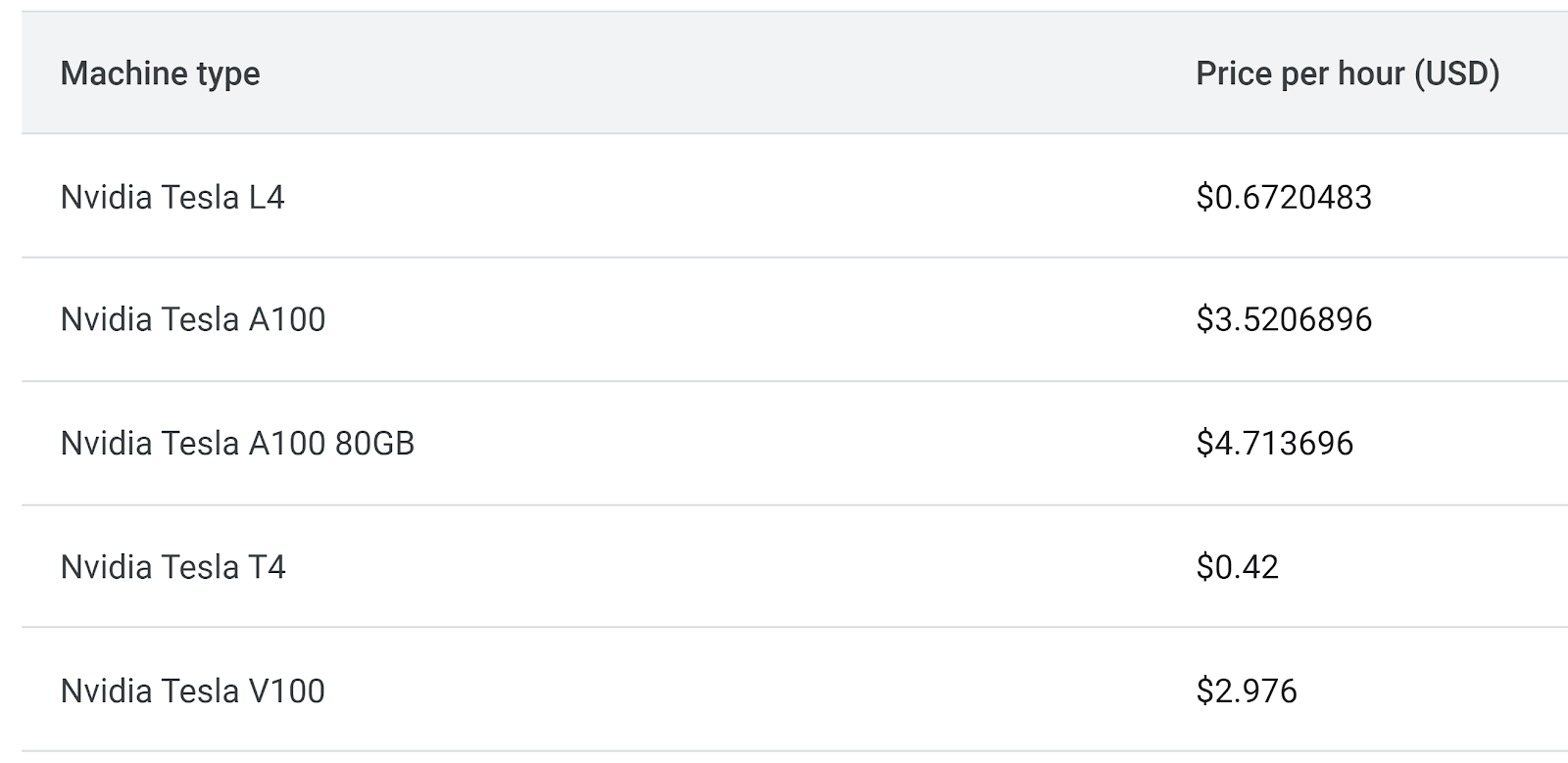

Google Colab’s tiered plans cater to different levels of users, from free access to enterprise-grade performance. Its compute unit pricing structure adds flexibility to its offerings.

1. Free Plan GPUs

- NVIDIA Tesla T4

- Specs: Cloud-hosted, specifics not disclosed for free-tier resources.

- Price: Free.

- Use Case: Small-scale machine learning experiments and data preprocessing.

- Benefits: Zero cost, but resources are limited and shared among users.

2. Enterprise Plan GPUs

- NVIDIA A100 (40GB and 80GB variants)

- Specs: High memory, enterprise-grade performance.

- Price: $3.52/hour (40GB), $4.71/hour (80GB).

- Use Case: Intensive AI tasks, large-scale simulations, and complex model training.

- Benefits: Reliable, with tight integration into Google Cloud services.

- NVIDIA Tesla V100 (32GB VRAM)

- Price: $2.97/hour.

- Use Case: Advanced deep learning applications.

- Benefits: Combines power and efficiency for demanding AI workloads.

3. Other Plans’ GPUs

- Google Colab Pro and Pro+ Plans provide access to upgraded GPUs and additional memory, but specific GPU details vary based on availability and user priority.
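Because the GPU you receive on Colab depends on availability, it is worth checking what the runtime actually assigned before starting a long job. The sketch below shells out to `nvidia-smi` with its CSV query flags; the `assigned_gpus` helper name is our own, and on a CPU-only runtime (or any machine without NVIDIA drivers) it simply returns an empty list.

```python
import shutil
import subprocess
from typing import List, Tuple


def assigned_gpus() -> List[Tuple[str, str]]:
    """Return (name, total memory) for each visible NVIDIA GPU, or [] if none are found."""
    if shutil.which("nvidia-smi") is None:
        return []  # No NVIDIA driver tools: CPU-only runtime or non-NVIDIA machine.
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    gpus = []
    for line in result.stdout.strip().splitlines():
        if "," not in line:
            continue  # Skip anything that is not a "name, memory" row.
        name, memory = (part.strip() for part in line.split(",", 1))
        gpus.append((name, memory))
    return gpus


print(assigned_gpus())
```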

Pricing Structure Comparison

- RunPod:

- Hourly billing.

- Pricing starts at $0.13/hour, scaling to $3.49/hour for premium GPUs like the AMD MI300X.

- Ideal for users with specific GPU needs and tight budgets.

- Google Colab:

- Compute unit-based billing for paid plans.

- Free access with limited resources.

- Enterprise plan pricing ranges from $0.42/hour to $4.71/hour, with compute unit expirations adding complexity.
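To make the difference concrete, the sketch below prices a 10-hour job on an 80GB A100 at the two rates quoted in this article: RunPod’s A100 PCIe on Community Cloud and Colab’s Enterprise A100 80GB. It assumes a flat hourly rate and ignores billing granularity, storage fees, and Colab’s compute-unit accounting, so treat it as back-of-the-envelope arithmetic rather than a quote. The `job_cost` helper is hypothetical.

```python
def job_cost(hours: float, usd_per_hour: float) -> float:
    """Cost of a job billed at a flat hourly rate, rounded to cents."""
    if hours < 0 or usd_per_hour < 0:
        raise ValueError("hours and rate must be non-negative")
    return round(hours * usd_per_hour, 2)


runpod_a100 = job_cost(10, 1.19)  # A100 PCIe 80GB, RunPod Community Cloud rate above
colab_a100 = job_cost(10, 4.71)   # A100 80GB, Colab Enterprise rate above
print(f"RunPod: ${runpod_a100:.2f}, Colab Enterprise: ${colab_a100:.2f}")
# prints "RunPod: $11.90, Colab Enterprise: $47.10"
```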

Features Comparison

Deployment and Scalability

RunPod

RunPod excels at delivering high-performance cloud servers with nearly instant GPU deployment. RunPod advertises cold-start times under 250 milliseconds for its serverless endpoints, so users can spin up GPUs almost instantly and jump straight into their workloads without delays. This is a standout feature for developers and researchers managing time-sensitive projects.

RunPod’s serverless autoscaling capabilities provide seamless adjustment to workload demands. Whether your tasks are small or require large-scale GPU clusters, RunPod dynamically scales resources to match the load, ensuring cost efficiency and uninterrupted performance.
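The core idea of queue-based autoscaling can be shown with a toy rule: run enough workers to drain the pending requests, clamped between a minimum and a maximum. This is a simplified illustration only, not RunPod’s actual scaling algorithm; the function name and parameters are hypothetical, though the min/max bounds mirror the minimum and maximum worker counts serverless platforms typically let you configure.

```python
import math


def desired_workers(queued_requests: int, requests_per_worker: int,
                    min_workers: int = 0, max_workers: int = 10) -> int:
    """Toy request-count scaling rule (illustrative; not RunPod's implementation)."""
    if requests_per_worker <= 0:
        raise ValueError("requests_per_worker must be positive")
    needed = math.ceil(queued_requests / requests_per_worker)
    # Clamp so idle periods can scale to min_workers and spikes never exceed max_workers.
    return max(min_workers, min(max_workers, needed))


print(desired_workers(9, 4))    # 3 workers for 9 queued requests at 4 per worker
print(desired_workers(100, 4))  # capped at max_workers = 10
```

Setting `min_workers` to 0 is what makes the setup cost-efficient when idle; raising it trades money for avoiding cold starts.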

Another key advantage is its support for custom containers, offering users full control over their environment. With the ability to deploy any image, developers can tailor their setup for specific use cases, whether it’s machine learning, rendering, or inference tasks. This flexibility makes RunPod a great option for users with diverse or complex workloads.
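When a custom image is used as a RunPod serverless worker, the code inside it is typically organized around a handler function that receives a job dictionary (with the request payload under `"input"`) and returns JSON-serializable output. The sketch below follows that pattern; the `"text"` input field and the string-processing "model" are hypothetical placeholders, and the SDK registration call is shown only as a comment so the file runs without the `runpod` package installed.

```python
def handler(job: dict) -> dict:
    """RunPod-style serverless handler: take a job dict, return JSON-serializable output."""
    job_input = job.get("input", {})
    text = job_input.get("text", "")  # hypothetical input field for this example
    # Placeholder "model": a real worker would load a model once and run inference here.
    return {"length": len(text), "upper": text.upper()}


# Inside the container image, the worker would be registered with the RunPod SDK:
#     import runpod
#     runpod.serverless.start({"handler": handler})

if __name__ == "__main__":
    # Local smoke test without the SDK, using a job shaped like the one the platform passes in.
    print(handler({"id": "local-test", "input": {"text": "hello"}}))
```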

Google Colab

Google Colab simplifies deployment with its browser-based Jupyter notebook interface. Users write and execute Python code directly in the browser, with no separate environment to configure. For beginners, or for those who prefer working within Google’s ecosystem, this ease of use is a significant advantage.

However, Google Colab is inherently tied to Google’s infrastructure, which restricts its deployment flexibility. Users cannot deploy their own images or utilize custom containers as they can on RunPod. This limitation can be a barrier for developers requiring a more tailored setup.

While Google Colab offers longer runtimes and priority access to resources in its paid versions (Pro, Pro+), its free version has constraints, such as capped runtimes and limited GPU availability. Additionally, real-time autoscaling isn’t supported, making it less ideal for tasks requiring dynamic resource allocation.

Both platforms cater to different audiences: RunPod appeals to those needing rapid deployment and full customization, while Google Colab shines for its accessibility and its ready-to-use Jupyter notebooks. The choice depends on whether you prioritize flexibility or convenience in a cloud server setup.

Ease of Use

RunPod

RunPod caters to developers who are already familiar with containerized workflows and want a streamlined way to deploy and manage GPU workloads. Its command-line interface (CLI) tools integrate easily into existing workflows, letting developers automate tasks like spinning up GPU instances, managing deployments, and hot-reloading changes in real time. This removes repetitive manual steps and boosts productivity.

For those comfortable working with Docker, RunPod offers extensive support for custom containers. Developers can deploy their own environments, ensuring compatibility with specific tools, dependencies, or frameworks. This flexibility makes RunPod a great choice for professionals who require a tailored setup or have advanced deployment needs.

Despite its advanced features, RunPod’s interface might feel less intuitive for beginners unfamiliar with CLI tools or Docker. For experienced developers, however, its design provides fine-grained control and efficiency.

Google Colab

Google Colab takes a different approach, focusing on ease of access and beginner-friendliness. It requires no prior setup or knowledge of Docker, making it a popular choice for students, researchers, and developers who want to dive into machine learning or data analysis without worrying about infrastructure. With pre-installed libraries like TensorFlow, PyTorch, NumPy, and Pandas, users can jump straight into coding. This eliminates the hassle of manually configuring environments, saving time for those working on smaller or exploratory projects.
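Since the exact library versions preinstalled on Colab change over time, a quick check at the top of a notebook helps confirm what you are working with. The sketch below uses the standard library’s `importlib.metadata`; the `installed_versions` helper is our own, and the list uses the PyPI distribution names for the libraries mentioned above (PyTorch is published as `torch`).

```python
from importlib import metadata

# PyPI distribution names for the libraries mentioned above.
LIBRARIES = ["tensorflow", "torch", "numpy", "pandas"]


def installed_versions(names=LIBRARIES) -> dict:
    """Map each distribution name to its installed version, or None if it is absent."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions().items():
    print(f"{name:<12} {version or 'not installed'}")
```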

Integration with Google Drive is another major advantage. Users can easily store their notebooks, datasets, and outputs in the cloud, ensuring they are accessible from anywhere. This feature also simplifies collaboration, as files can be shared and edited in real time by multiple users.
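In a Colab notebook, Drive is attached with `google.colab.drive.mount`, after which your files appear under the mount point (by default `/content/drive/MyDrive`). The sketch below wraps that call so the same notebook code also runs outside Colab, where it simply returns `None`; the `mount_drive` wrapper is our own name.

```python
import os
from typing import Optional


def mount_drive(mount_point: str = "/content/drive") -> Optional[str]:
    """Mount Google Drive when running in Colab; return the MyDrive path, or None elsewhere."""
    try:
        from google.colab import drive  # only importable inside a Colab runtime
    except ImportError:
        return None
    drive.mount(mount_point)  # prompts for authorization the first time
    return os.path.join(mount_point, "MyDrive")


drive_root = mount_drive()
print(drive_root or "Not running in Colab; using local storage instead.")
```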

While its simplicity is a strength, Google Colab may not provide the same level of control or customization as RunPod. For users needing advanced configurations or working with large-scale projects, Colab’s limitations, like runtime restrictions and lack of custom container support, can be a drawback.

Google Colab excels in accessibility and ease of use for beginners, while RunPod is tailored for developers who need robust tools and customizable environments. The choice depends on your expertise and the complexity of your workflows.


Collaboration

RunPod

RunPod prioritizes enterprise-grade security, making it an excellent choice for teams handling sensitive data or projects that require stringent compliance standards. It supports private and public container repositories, ensuring that teams can collaborate securely on containerized workflows. This is particularly beneficial for organizations with custom environments or proprietary tools.

However, RunPod lacks real-time editing features, which can make collaboration less seamless compared to other platforms. Developers working on shared projects must rely on external tools or version control systems like Git to synchronize changes. This approach may add an extra layer of complexity, especially for teams distributed across different locations.

Despite this, RunPod supports advanced workflows by providing customizable templates and containers, enabling efficient collaboration among developers familiar with Docker. While it may not offer real-time edits, its security features and scalability make it suitable for professional teams prioritizing control and compliance over immediate convenience.

Google Colab

Google Colab shines in collaborative features, offering real-time editing and commenting capabilities. Teams can work together on a shared notebook, with changes visible instantly to all participants. This feature is particularly advantageous for remote teams, as it fosters smooth communication and rapid iteration on projects.

Colab also integrates with GitHub, enabling users to import, export, and manage their notebooks directly through version control. This integration supports tracking changes and synchronizing work across team members, reducing the chances of conflicting edits.
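One convenient part of this integration is that a notebook stored on GitHub can be opened in Colab through a URL of the form `https://colab.research.google.com/github/<owner>/<repo>/blob/<branch>/<path>`, which is handy for "Open in Colab" links in a README. The sketch below builds such a URL; the helper name and the example repository are hypothetical.

```python
from urllib.parse import quote


def colab_url_for_github(owner: str, repo: str, path: str, branch: str = "main") -> str:
    """Build a URL that opens a notebook stored on GitHub directly in Colab."""
    # quote() keeps "/" intact by default, so nested notebook paths survive.
    return (
        "https://colab.research.google.com/github/"
        f"{quote(owner)}/{quote(repo)}/blob/{quote(branch)}/{quote(path)}"
    )


# Hypothetical repository, for illustration only.
print(colab_url_for_github("example-org", "ml-notebooks", "notebooks/train.ipynb"))
# prints "https://colab.research.google.com/github/example-org/ml-notebooks/blob/main/notebooks/train.ipynb"
```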

However, while Google Colab’s real-time collaboration is a strong point, its lack of robust security features might not be ideal for sensitive enterprise projects. Additionally, collaboration heavily relies on Google Drive, which could limit flexibility for teams using non-Google ecosystems.

Google Colab’s collaboration tools excel at real-time teamwork and remote projects, while RunPod offers stronger security for more controlled, enterprise-level collaboration.

Storage and Networking

RunPod

RunPod offers a robust storage infrastructure designed for flexibility and high-performance workloads. Users can choose between persistent and temporary storage options, allowing for tailored solutions depending on project requirements. Persistent storage ensures data is retained across sessions, while temporary storage is ideal for short-term tasks.

The platform supports 100TB+ of network storage backed by NVMe SSDs, ensuring high-speed data access. This is especially useful for data-intensive tasks like training large machine learning models or processing extensive datasets. RunPod also provides customizable pod volumes and container disk configurations, giving users control over storage allocation.

Pricing for storage starts at $0.07 per GB per month, with discounts available for larger volumes. This cost-effective structure makes it an appealing choice for developers and enterprises managing substantial data workloads.
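At the starting rate, estimating a storage bill is simple multiplication. The sketch below assumes the flat $0.07 per GB per month figure quoted above and ignores the volume discounts, so it gives an upper-bound estimate for large volumes; the helper name is hypothetical.

```python
def monthly_storage_cost(size_gb: float, usd_per_gb_month: float = 0.07) -> float:
    """Monthly cost at a flat per-GB rate (volume discounts are ignored)."""
    if size_gb < 0:
        raise ValueError("size_gb must be non-negative")
    return round(size_gb * usd_per_gb_month, 2)


print(monthly_storage_cost(500))   # 500 GB at $0.07/GB/month, prints 35.0
print(monthly_storage_cost(1000))  # 1,000 GB (1 TB), prints 70.0
```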

RunPod’s storage seamlessly integrates with its GPU pods, reducing data transfer delays and ensuring efficient workflows. Its network storage is designed for high throughput, supporting demanding AI and ML workloads.

Google Colab

Google Colab leverages Google Drive for storage, providing an easy-to-use solution for saving and sharing notebooks. While convenient, this storage model may incur additional costs for larger volumes, especially if a project exceeds the free Drive storage limit. For enterprise users, Colab integrates with Google Cloud Storage, enabling scalable solutions for large-scale data requirements.

Data saved in Google Drive can be accessed from anywhere and shared effortlessly, supporting team collaboration. However, Drive’s reliance on internet connectivity might slow down workflows for users in regions with limited bandwidth.

Google Colab’s storage is tightly coupled with its ecosystem, which simplifies integration but limits flexibility for those who prefer third-party storage solutions. Despite these limitations, its seamless syncing with notebooks and support for importing datasets from various sources make it a practical option for lightweight projects.

RunPod offers superior flexibility and high-performance storage for demanding workloads, while Google Colab provides simple, integrated solutions for smaller projects. Both platforms cater to different needs based on the scale and complexity of the task.

Use Cases and Recommendations

When to Choose RunPod

- You need specific GPUs at competitive hourly rates.

- You prefer full control over your environment with custom containers.

- You require rapid deployment and scalability for time-sensitive projects.

When to Choose Google Colab

- You’re new to machine learning and need a beginner-friendly interface.

- You value real-time collaboration and Google Cloud integration.

- You’re working on smaller projects where free or affordable compute is sufficient.

Google Colab vs RunPod: Final Thoughts

Both RunPod and Google Colab excel in different areas, making them suitable for distinct user profiles. RunPod’s granular GPU offerings and flexible pricing cater to professionals who need performance and control. On the other hand, Google Colab’s ease of use and collaborative features make it an excellent choice for beginners and research teams.

Your choice boils down to your project’s demands, budget, and technical expertise. With this comparison, you’re now equipped to pick the best cloud GPU provider for your needs.