CoreWeave vs AWS: GPU Compute Power and Pricing Compared

If you’re working with GPU-accelerated workloads, you know how important it is to pick the right cloud provider. Whether you’re doing machine learning, VFX rendering, or any compute-heavy task, your choice impacts both performance and costs. CoreWeave and AWS are two solid options for handling these kinds of workloads, but how do they stack up against each other?

On one hand, CoreWeave claims faster speeds and lower costs, while AWS offers flexibility and a massive range of services. In this CoreWeave vs AWS comparison, we’ll break down what each brings to the table in terms of GPU models, pricing, and overall value.

Affiliate Disclosure

We are committed to being transparent with our audience. When you purchase via our affiliate links, we may receive a commission at no extra cost to you. These commissions support our ability to deliver independent and high-quality content. We only endorse products and services that we have personally used or carefully researched, ensuring they provide real value to our readers.


GPU Compute Comparison

Let’s examine the GPU models, architectures, pricing, and use cases to help you make an informed decision.

Looking for budget-friendly, high-performance GPUs? CUDO Compute offers fast access to top-tier NVIDIA and AMD GPUs on demand. Sign up now!


The Variety of GPUs Offered

When it comes to offering a variety of GPU models, both CoreWeave and AWS provide a robust selection. However, the two services differ in their approach and in the hardware they offer.

AWS GPU Models

AWS has a wide range of GPUs, including models from NVIDIA and AMD, as well as older models that remain useful for certain tasks. Here’s a quick rundown of the major GPUs AWS offers:

- NVIDIA Tesla M60 (G3 instances): The NVIDIA Tesla M60 is an older but still capable GPU, primarily used for graphics-heavy workloads like 3D visualization, rendering, and gaming. It’s based on the Maxwell architecture and delivers decent performance for virtual desktops and workstations. This GPU is often used in scenarios where users need to work with 3D models or other visually intensive applications without requiring the latest hardware. The Tesla M60 can support multiple users per instance, making it a cost-effective option for graphics rendering at scale.

- NVIDIA T4 (G4dn instances): The NVIDIA T4 is one of the most versatile and cost-effective GPUs offered by AWS. It’s based on the Turing architecture and is well-suited for machine learning inference, small-scale AI training, and even some video processing.

The T4 can handle a variety of workloads, including natural language processing (NLP), recommendation systems, and image classification. With its balance of performance and cost, the T4 is often used in applications that require moderate GPU power but don’t justify the cost of higher-end GPUs like the A100.

- AMD Radeon Pro V520 (G4ad instances): The AMD Radeon Pro V520 is a solid choice for those looking for high-performance graphics at a more affordable price. It’s ideal for use cases like game streaming, virtual workstations, and other graphically intensive applications.

The V520 is based on AMD’s RDNA architecture and provides a good balance of power and price, making it a popular option for companies looking to cut GPU costs for graphics workloads. Its focus on gaming and 3D rendering makes it a competitor to NVIDIA’s T4 for similar use cases.

- NVIDIA A10G Tensor Core (G5 instances): The NVIDIA A10G Tensor Core is a versatile GPU designed for a mix of machine learning inference and high-end graphics applications. It’s based on the Ampere architecture and provides a significant boost in AI inference capabilities while also excelling at tasks like video rendering, simulations, and graphics-heavy workloads.

The A10G is a strong fit for businesses that need both AI processing power and graphics rendering capabilities, such as media, entertainment, and healthcare organizations that require advanced image processing.

- NVIDIA A100 (P4 instances): The NVIDIA A100 Tensor Core is a powerhouse for deep learning and high-performance computing (HPC) tasks. It’s based on the Ampere architecture and supports large-scale distributed training, making it ideal for AI and machine learning model training at scale.

The A100 excels at accelerating large workloads in sectors like research, finance, and science, where heavy computational tasks like simulations and deep neural networks are common. With its ability to handle complex calculations, it’s a go-to choice for any application that requires massive GPU compute power.

- NVIDIA H100 (P5 instances): The NVIDIA H100 is NVIDIA’s Hopper-generation data center GPU, designed for cutting-edge AI and high-performance computing. It is the most powerful GPU in this AWS lineup for deep learning, especially for tasks like generative AI and advanced simulations.

The H100 can scale across thousands of GPUs, making it well suited to large-scale AI models and distributed training environments. Features such as fourth-generation NVLink and higher memory bandwidth than the A100 make it AWS’s top choice for the most demanding GPU workloads.

Boost your AI and machine learning projects with CUDO Compute’s powerful cloud GPUs. Sign up now!

CoreWeave GPU Models

CoreWeave’s bread and butter is NVIDIA GPUs, and they’ve tailored their offerings specifically for AI, rendering, and high-performance computing. Some of the standout models include:

- NVIDIA H100: The NVIDIA H100 is CoreWeave’s flagship GPU offering, tailored for the most demanding AI and machine learning tasks. Based on the Hopper architecture, the H100 delivers exceptional performance for tasks like large-scale model training, generative AI, and deep learning.

It’s designed to scale across distributed clusters, making it ideal for research institutions, AI startups, and enterprises working on cutting-edge projects. If you want the most capable GPU in this lineup for AI, the H100 is the top choice, delivering both speed and scalability.

- NVIDIA A100: The NVIDIA A100 is another high-performance GPU offered by CoreWeave, optimized for deep learning and high-performance computing tasks. While less powerful than the H100, the A100 still excels in tasks like AI model training, simulations, and data analytics.

It’s based on the Ampere architecture, making it highly efficient for large-scale distributed training. With support for Multi-Instance GPU (MIG), the A100 can be partitioned into up to seven isolated instances to run multiple workloads simultaneously, giving you flexibility without the high cost of the H100.

- NVIDIA A40 and A5000: The NVIDIA A40 and A5000 are designed for graphics-intensive workloads, including rendering, video editing, and complex visualizations. These GPUs offer a more affordable option compared to the A100 and H100, while still providing high-end performance for tasks like 3D modeling, CAD, and virtual production.

Both models are based on NVIDIA’s Ampere architecture and deliver excellent performance for professionals in media, entertainment, and design. If you need strong GPU power for creative workflows, the A40 and A5000 are solid choices.

- RTX 4000 and 5000: The NVIDIA RTX 4000 and 5000 GPUs are popular for virtual workstations, offering high performance for graphics-heavy tasks. These GPUs are based on the Turing architecture and are commonly used for 3D rendering, game development, and complex visual effects.

They provide a cost-effective option for users who need good graphical performance but don’t require the heavy-duty power of the A100 or H100. These models are ideal for creative professionals working in design, animation, and video editing who need reliable performance without breaking the bank.

- A4000, A6000: The NVIDIA A4000 and A6000 are highly versatile GPUs that are well-suited for both rendering tasks and AI model serving. These GPUs offer flexibility in switching between graphics workloads and computational tasks, making them ideal for industries like architecture, engineering, and media production.

The A4000 provides a more affordable entry point, while the A6000 delivers top-tier performance for more demanding tasks. Both models are based on the Ampere architecture and are widely used in creative industries where a balance of power and cost is essential.
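The Multi-Instance GPU (MIG) partitioning mentioned for the A100 can be reasoned about with simple slice arithmetic. The sketch below assumes the A100 40GB profile names and models only the seven compute slices; NVIDIA’s MIG User Guide is the authoritative list of profiles and placement rules, and 80GB cards use different profile names.

```python
# Sketch: how many copies of one MIG profile fit on a single A100.
# Assumption: the A100 exposes 7 compute slices and a profile "<g>g.<mem>gb"
# consumes g of them. Memory slices are not modeled; for the A100 40GB
# profiles below this still yields NVIDIA's documented homogeneous maximums.
A100_COMPUTE_SLICES = 7

# Profile name -> compute slices it uses (A100 40GB naming).
A100_40GB_PROFILES = {
    "1g.5gb": 1,
    "2g.10gb": 2,
    "3g.20gb": 3,
    "4g.20gb": 4,
    "7g.40gb": 7,
}


def max_instances(profile: str) -> int:
    """Return how many identical instances of `profile` fit on one A100."""
    slices = A100_40GB_PROFILES[profile]
    return A100_COMPUTE_SLICES // slices


if __name__ == "__main__":
    for name in A100_40GB_PROFILES:
        print(f"{name}: up to {max_instances(name)} instance(s) per GPU")
```

Printing the table gives 7, 3, 2, 1, and 1 instances for the five profiles. Mixed layouts (for example, one 3g plus several 1g instances) are further constrained by placement rules that this sketch ignores.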

Takeaway: While AWS offers a broader range of both NVIDIA and AMD GPUs, CoreWeave specializes in providing high-end NVIDIA GPUs tailored for compute-intensive tasks like AI model training and rendering. If you’re strictly looking for NVIDIA’s latest offerings for AI or deep learning, CoreWeave might be your go-to. But if you need a variety of older models, AWS has you covered.

Looking for scalable and flexible GPU cloud services for AI or rendering? CUDO Compute delivers reliable options at competitive rates. Sign up now!

GPU Architectures and Features

GPU architecture plays a crucial role in determining the performance of your workloads. Both providers offer some of the latest GPU architectures.

AWS GPU Architecture

- G3 Instances (NVIDIA Tesla M60): These GPUs are older, based on the Maxwell architecture, but still deliver solid performance for graphics-intensive tasks such as virtual workstations and 3D visualization.

- G4dn Instances (NVIDIA T4): Based on the Turing architecture, optimized for both graphics and AI workloads.

- G4ad Instances (AMD Radeon Pro V520): Based on AMD’s Vega architecture, designed for price-performance efficiency in graphics-heavy workloads.

- G5 Instances (NVIDIA A10G Tensor Core): Based on NVIDIA’s Ampere architecture, these GPUs deliver powerful performance for machine learning and graphics workloads.

- P4 Instances (NVIDIA A100 Tensor Core): Featuring Ampere architecture, designed for large-scale AI training and HPC tasks, with support for multi-GPU setups and fast interconnects.

- P5 Instances (NVIDIA H100): Featuring Hopper architecture, these GPUs offer the highest performance for deep learning, generative AI, and complex simulations.

CoreWeave GPU Architecture

CoreWeave focuses exclusively on NVIDIA GPUs, with an emphasis on recent high-end architectures:

- NVIDIA H100: Based on the Hopper architecture, this GPU is a top performer for AI model training, with support for GPUDirect RDMA over InfiniBand networking for distributed training.

- NVIDIA A100: Utilizing the Ampere architecture, it offers great performance for both AI training and inference, excelling in both speed and efficiency.

- NVIDIA A40, A5000: Ampere architecture, designed for rendering and graphics-heavy tasks, with exceptional price-to-performance ratios.

- RTX 4000, 5000: Turing-based architecture, optimized for graphics and professional workloads like virtual workstations.
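The architecture lists above can be compared directly as sets. This sketch encodes only the NVIDIA models named in this article (AWS’s AMD Radeon Pro V520 is left out) and computes which architectures each provider covers; the dictionary names are illustrative.

```python
# GPU model -> architecture, as listed in this article (NVIDIA parts only).
AWS_GPUS = {
    "Tesla M60": "Maxwell",
    "T4": "Turing",
    "A10G": "Ampere",
    "A100": "Ampere",
    "H100": "Hopper",
}

COREWEAVE_GPUS = {
    "H100": "Hopper",
    "A100": "Ampere",
    "A40": "Ampere",
    "A5000": "Ampere",
    "A4000": "Ampere",
    "A6000": "Ampere",
    "RTX 4000": "Turing",
    "RTX 5000": "Turing",
}


def architectures(catalog: dict[str, str]) -> set[str]:
    """Collect the distinct architectures present in a GPU catalog."""
    return set(catalog.values())


aws = architectures(AWS_GPUS)
coreweave = architectures(COREWEAVE_GPUS)

print("Both:", sorted(aws & coreweave))
print("AWS only:", sorted(aws - coreweave))
print("CoreWeave only:", sorted(coreweave - aws))
```

With these inputs, both providers share Turing, Ampere, and Hopper, and only AWS adds Maxwell. In other words, CoreWeave’s lineup also includes Turing-based cards, not just the newest architectures.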

Takeaway: Both providers offer cutting-edge GPUs, but CoreWeave focuses solely on NVIDIA, with an emphasis on its newest architectures, making it a better fit if you’re seeking the latest GPU technology for AI and rendering tasks. Hyperscalers like AWS and Google Cloud Platform offer a mix of older and newer architectures (and, on AWS, AMD GPUs), providing more flexibility for diverse workloads.

CUDO Compute provides on-demand access to both NVIDIA and AMD GPUs, making it a great fit for AI, machine learning, and high-performance computing tasks. Sign up now!

Pricing Comparison

Price is often the deciding factor for many users. Both AWS and CoreWeave offer competitive pricing, but their pricing structures differ.

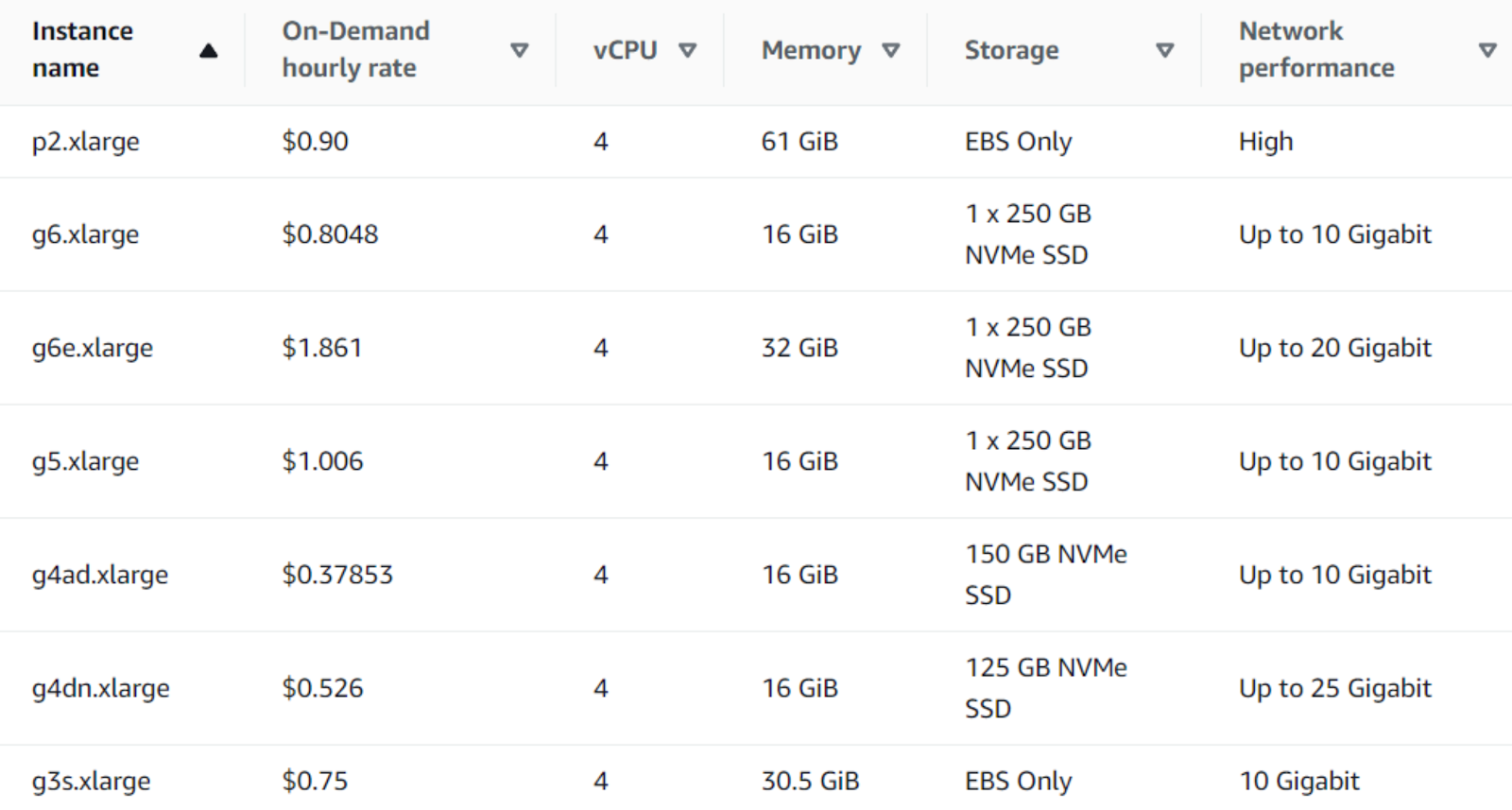

AWS GPU Pricing

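AWS offers on-demand, reserved instance, and spot instance pricing, giving you flexibility depending on your commitment level. The on-demand rates below (Linux, US East) are per instance, not per GPU, so multi-GPU sizes cost more per hour.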

- G3 Instances: Starting at $0.75/hr for the g3s.xlarge, with discounts available for 1- and 3-year reserved instances.

- G4dn Instances: Prices range from $0.526/hr for the g4dn.xlarge (1x T4) to $7.824/hr for the g4dn.metal (8x T4).

- G4ad Instances: Starting at $0.379/hr for the g4ad.xlarge.

- G5 Instances: Prices start at $1.006/hr for the g5.xlarge (1x A10G) and go up to $16.288/hr for the g5.48xlarge (8x A10G).

- P4 Instances: The powerful p4d.24xlarge (8x A100) costs $32.77/hr.

- P5 Instances: Pricing for the p5.48xlarge (8x H100) is listed on the AWS EC2 pricing page and is considerably higher per hour than the P4 series; check current regional rates before budgeting.
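Because AWS prices the instance rather than the GPU, dividing by the GPU count gives a rate you can line up against per-GPU pricing. The minimal sketch below uses the on-demand figures quoted above plus the GPU count of each size; the dictionary and function names are illustrative, and the prices are a snapshot that AWS may have changed since.

```python
# On-demand prices quoted in this article (USD per instance-hour) and the
# number of GPUs in each instance size. Treat the prices as a snapshot.
AWS_INSTANCES = {
    # name: (price_per_hour, gpu_count, gpu_model)
    "g3s.xlarge": (0.75, 1, "Tesla M60"),
    "g4dn.xlarge": (0.526, 1, "T4"),
    "g4dn.metal": (7.824, 8, "T4"),
    "g4ad.xlarge": (0.379, 1, "Radeon Pro V520"),
    "g5.xlarge": (1.006, 1, "A10G"),
    "g5.48xlarge": (16.288, 8, "A10G"),
    "p4d.24xlarge": (32.77, 8, "A100"),
}


def price_per_gpu_hour(instance: str) -> float:
    """Divide an instance's hourly price by the number of GPUs it contains."""
    price, gpus, _model = AWS_INSTANCES[instance]
    return price / gpus


for name, (_price, _gpus, model) in AWS_INSTANCES.items():
    print(f"{name:14s} {model:16s} ${price_per_gpu_hour(name):.3f}/GPU-hr")
```

Larger sizes such as g4dn.metal come out more expensive per GPU than g4dn.xlarge because they also bundle far more vCPUs, memory, and network bandwidth, so the per-GPU figure is a starting point rather than a complete comparison.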

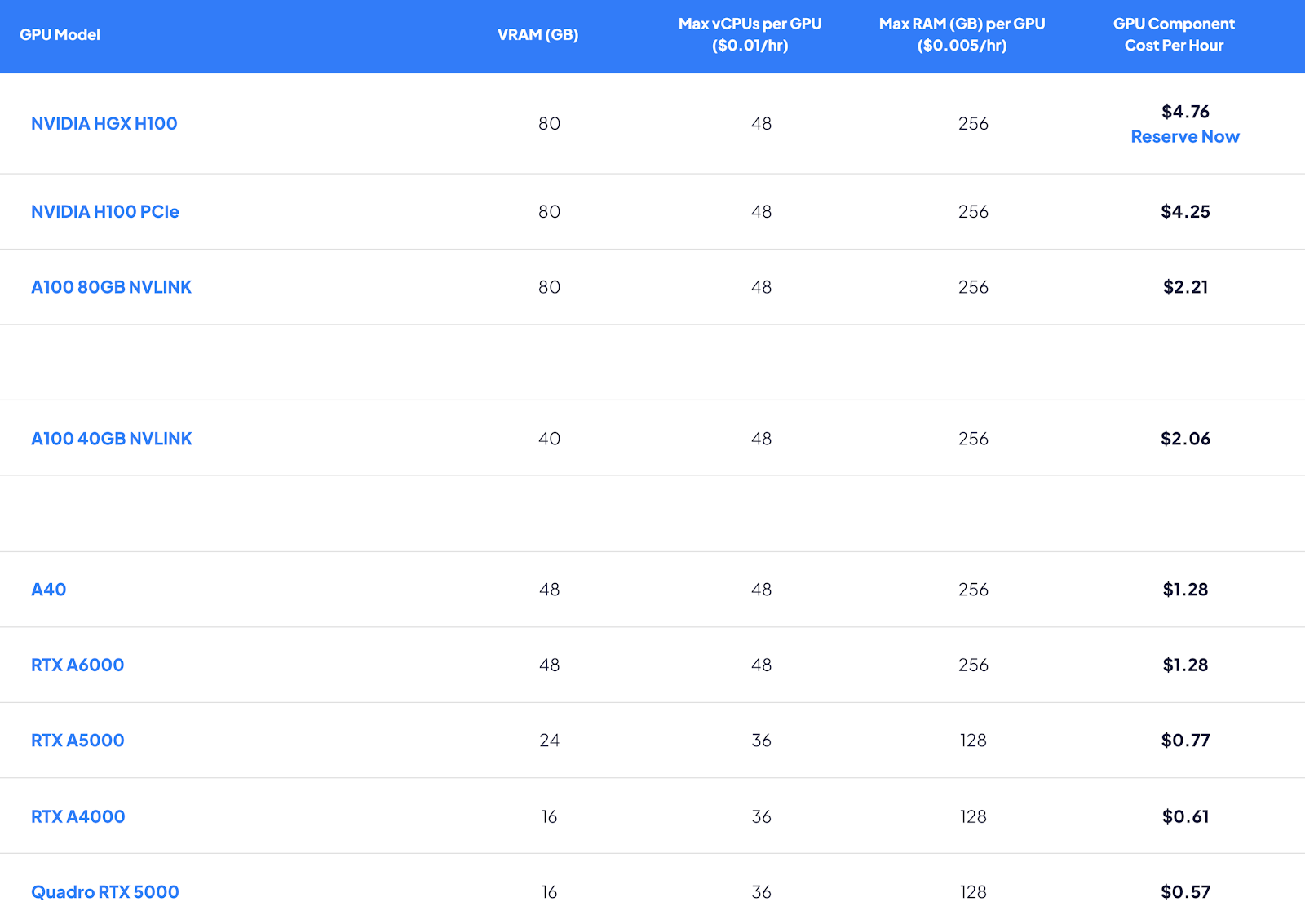

CoreWeave GPU Pricing

CoreWeave claims savings of up to 80% compared to general-purpose cloud providers, thanks to its specialized focus. Its pricing is also transparent and flexible. Some examples:

- NVIDIA H100: Starting at $2.60/hr.

- NVIDIA A100: Starting at $1.60/hr.

- NVIDIA A40: Starting at $0.70/hr.

- RTX 4000: Starting at $0.45/hr.

CoreWeave’s pricing is straightforward, with no additional hidden costs, unlike some of the complex pricing models you may find with AWS.
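To check the savings claim against the article’s own numbers, the sketch below converts the p4d.24xlarge price to a per-GPU rate and compares it with CoreWeave’s A100 starting rate. It assumes the CoreWeave figure is per GPU and that the two rates are comparable, even though they may not bundle the same CPU, memory, or networking; `savings_pct` is a hypothetical helper.

```python
def savings_pct(reference_per_gpu_hr: float, candidate_per_gpu_hr: float) -> float:
    """Percentage saved by paying the candidate rate instead of the reference rate."""
    return (reference_per_gpu_hr - candidate_per_gpu_hr) / reference_per_gpu_hr * 100


# A100 comparison using the list prices quoted in this article.
aws_a100 = 32.77 / 8     # p4d.24xlarge hourly price spread over its 8 A100s
coreweave_a100 = 1.60    # CoreWeave A100 starting rate, assumed per GPU

print(f"AWS A100:       ${aws_a100:.2f}/GPU-hr")
print(f"CoreWeave A100: ${coreweave_a100:.2f}/GPU-hr")
print(f"Savings:        {savings_pct(aws_a100, coreweave_a100):.0f}%")
```

On these list prices the A100 gap works out to roughly 61%, below the "up to 80%" headline, which suggests that figure describes a best case rather than a typical one.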

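Takeaway: CoreWeave generally offers lower prices than AWS, particularly for high-end GPUs like the A100 and H100. Keep in mind that AWS prices are per instance (a p4d.24xlarge includes eight A100 GPUs), while the CoreWeave rates listed above are per-GPU starting prices, so compare the two on a per-GPU-hour basis. If cost-efficiency is a key factor, especially for large-scale AI workloads, CoreWeave will likely save you money. However, AWS offers more purchasing options, including spot and reserved instances, which could narrow the gap depending on your use case.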
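Spot and reserved pricing change the math, so it can help to cost out a whole job rather than compare hourly rates. The sketch below estimates a hypothetical 64-GPU, 72-hour A100 job; the 40% discount is a placeholder, not an AWS quote, since actual reserved and spot discounts vary by instance type, region, and term (and spot capacity can be interrupted).

```python
from dataclasses import dataclass


@dataclass
class Rate:
    per_gpu_hour: float    # USD list price per GPU-hour
    discount: float = 0.0  # fractional discount, e.g. 0.40 for 40% off (hypothetical)

    def __post_init__(self) -> None:
        if not 0.0 <= self.discount < 1.0:
            raise ValueError("discount must be in [0, 1)")

    def effective(self) -> float:
        """Per-GPU-hour price after the discount is applied."""
        return self.per_gpu_hour * (1.0 - self.discount)


def job_cost(gpus: int, hours: float, rate: Rate) -> float:
    """Total cost of running `gpus` GPUs for `hours` wall-clock hours."""
    return gpus * hours * rate.effective()


# Hypothetical job: 64 A100s for 72 hours.
aws_on_demand = Rate(32.77 / 8)                 # p4d.24xlarge per-GPU rate
aws_committed = Rate(32.77 / 8, discount=0.40)  # placeholder discount, not an AWS quote
coreweave = Rate(1.60)                          # CoreWeave A100 starting rate

for label, rate in [
    ("AWS on-demand", aws_on_demand),
    ("AWS with hypothetical 40% discount", aws_committed),
    ("CoreWeave", coreweave),
]:
    print(f"{label:36s} ${job_cost(64, 72, rate):,.2f}")
```

Swapping in your own discount and job size shows how large a commitment discount would need to be before AWS matches CoreWeave’s list rate for a given workload.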

CUDO Compute delivers powerful and budget-friendly cloud GPU solutions tailored to your AI and machine learning needs. Sign up now!

Scalability and Availability

AWS Scalability

AWS operates globally with a massive data center footprint, offering GPU instances in multiple regions. AWS also provides features like Elastic Fabric Adapter (EFA), enabling you to scale workloads across thousands of GPUs for deep learning or high-performance computing (HPC) tasks.

AWS’s EC2 UltraClusters provide scale-out capacity with enterprise-grade security, supporting up to 20,000 H100 GPUs (in P5 instances) with over 20 exaflops of aggregate compute capacity.
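The UltraCluster figures lend themselves to a quick sizing check. The sketch assumes eight GPUs per P5 instance (the p5.48xlarge configuration) and treats "over 20 exaflops" as exactly 20; the source does not state the numeric precision behind that figure, so the per-GPU value is only a rough average. The constant and function names are illustrative.

```python
import math

GPUS_PER_P5_INSTANCE = 8        # p5.48xlarge carries 8 H100 GPUs
ULTRACLUSTER_MAX_GPUS = 20_000  # upper bound quoted for EC2 UltraClusters
ULTRACLUSTER_EXAFLOPS = 20      # "over 20 exaflops", used here as a lower bound


def instances_needed(gpus: int, gpus_per_instance: int = GPUS_PER_P5_INSTANCE) -> int:
    """Whole instances required to supply at least `gpus` GPUs."""
    return math.ceil(gpus / gpus_per_instance)


def per_gpu_petaflops(exaflops: float, gpus: int) -> float:
    """Average throughput per GPU in petaflops (1 exaflop = 1,000 petaflops)."""
    return exaflops * 1_000 / gpus


print("Instances for a full UltraCluster:", instances_needed(ULTRACLUSTER_MAX_GPUS))
print("Instances for 100 GPUs:", instances_needed(100))
print("Average PFLOPS per GPU:", per_gpu_petaflops(ULTRACLUSTER_EXAFLOPS, ULTRACLUSTER_MAX_GPUS))
```

Rounding up matters at small scale: 100 GPUs need 13 instances, which actually provides 104 GPUs you pay for.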

CoreWeave Scalability

CoreWeave is more specialized but still offers impressive scalability for GPU workloads. With a large fleet of NVIDIA GPUs across multiple data centers, CoreWeave provides on-demand access to its GPUs and virtual machines, making it a good fit for elastic, bursty workloads like AI training, rendering, or data analytics.

CoreWeave also provides distributed training clusters powered by H100 GPUs, specifically optimized for machine learning.

Takeaway: If you need large-scale GPU resources, AWS’s vast cloud infrastructure is unmatched. However, for specialized AI and rendering tasks, CoreWeave offers the necessary scale with a focus on providing high-end NVIDIA GPUs.

Performance: Use Cases and Flexibility

AWS Use Cases

AWS is versatile and provides GPU instances for a wide range of use cases:

- AI/ML: P4 and P5 instances are great for large-scale deep learning models and distributed machine learning tasks.

- Graphics: G4dn and G5 instances are perfect for graphics-intensive applications, 3D rendering, game streaming, and virtual workstations.

- High-Performance Computing: P4 and P5 instances provide high-performance computing resources with industry-leading performance, making AWS a go-to for scientific simulations, complex analytics, and financial modeling.

CoreWeave Use Cases

CoreWeave is hyper-focused on compute-intensive tasks, particularly in:

- AI/ML: CoreWeave’s H100 and A100 GPUs are ideal for AI model training and inference at scale, leveraging GPUDirect and InfiniBand for distributed training.

- Rendering: Their A40, A5000, and A4000 GPUs are designed to handle heavy 3D rendering and visual effects, often used in industries like film and game development.

- Graphics: RTX 4000 and 5000 GPUs serve remote workstations, delivering high-end graphics performance for video editing, design, and other creative workflows.
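The use-case lists above can be condensed into a small decision table. This is only a summary of the article’s recommendations, keyed by hypothetical workload labels; `None` marks a case the article does not cover for that provider.

```python
# Workload -> (AWS option, CoreWeave option), summarizing this article's lists.
RECOMMENDATIONS = {
    "ai_ml": ("P4 (A100) / P5 (H100)", "A100 / H100"),
    "rendering": ("G4dn (T4) / G5 (A10G)", "A40 / A5000 / A4000"),
    "virtual_workstation": ("G4dn (T4) / G5 (A10G)", "RTX 4000 / RTX 5000"),
    "hpc": ("P4 (A100) / P5 (H100)", None),
}


def suggest(workload: str, provider: str) -> str:
    """Return this article's suggested GPU option for a workload on one provider."""
    if workload not in RECOMMENDATIONS:
        raise ValueError(f"unknown workload: {workload!r}")
    aws, coreweave = RECOMMENDATIONS[workload]
    options = {"aws": aws, "coreweave": coreweave}
    key = provider.lower()
    if key not in options:
        raise ValueError(f"unknown provider: {provider!r}")
    choice = options[key]
    return choice if choice is not None else "not covered in this comparison"


print(suggest("rendering", "CoreWeave"))
print(suggest("hpc", "coreweave"))
```

Keeping the table as data rather than branching logic makes it easy to extend when either provider changes its lineup.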

Takeaway: AWS’s broad range of use cases makes it an excellent option if you need GPU resources for various workloads. CoreWeave, however, has a competitive edge with its focus on AI, machine learning, and rendering, offering exceptional performance and cost-efficiency for these specialized tasks.

CoreWeave vs AWS: Final Verdict

If you’re focused on NVIDIA GPUs, AI/ML workloads, or rendering, CoreWeave offers a more specialized and cost-effective solution. With lower prices and real-time access to high-end GPUs like the A100 and H100, it’s an excellent choice for compute-intensive workloads.

On the other hand, AWS is a general-purpose cloud provider with a broader range of GPU options, including older models and AMD GPUs. It’s also ideal for companies requiring massive scale and versatility across multiple use cases, such as AI, rendering, HPC, and graphics.

Ultimately, the choice between CoreWeave and AWS cloud services depends on your specific GPU needs, budget, and scalability requirements. You can also read our Lambda Labs review, which covers another solid option for your AI workloads.

CUDO Compute provides high-performance, cost-effective cloud GPU solutions, specifically designed to power your AI and machine learning projects. Sign up now!