TensorDock Review 2025: A Cost-Effective Cloud GPU Solution for AI and Gaming

In the ever-changing world of cloud computing, the need for powerful and affordable GPUs has never been greater. TensorDock enters the scene as a new player in the cloud GPU market, promising a compelling alternative to the major cloud providers. With a focus on affordability and flexibility, TensorDock aims to serve AI developers, gamers, and anyone else who needs lots of compute power without the big price tag. But does it deliver? Let’s dive in and find out.

Is TensorDock Worth It?

TensorDock is a good fit for AI workloads, rendering, and cloud gaming. With a wide GPU selection and competitive pricing, it’s hard to pass up. But be aware: performance can be hit or miss because much of the hardware comes from third-party hosts. And while it’s a great platform for experienced users, beginners might find it a bit tricky.

Affiliate Disclosure

We are committed to being transparent with our audience. When you purchase via our affiliate links, we may receive a commission at no extra cost to you. These commissions support our ability to deliver independent and high-quality content. We only endorse products and services that we have personally used or carefully researched, ensuring they provide real value to our readers.

TensorDock Pros and Cons

Pros:

- Affordable Pricing: Pricing is significantly lower than at major cloud providers such as AWS, Google Cloud, and Azure.

- Wide GPU Selection: Options range from consumer GPUs like the RTX 4090 to high-end enterprise models like the H100.

- Global Availability: With 100+ locations across 20+ countries, you can deploy servers almost anywhere.

- Customizable Resources: Tailor CPU, RAM, and storage to your needs.

- No Hidden Fees: No surprise costs like ingress and egress fees.

- API Access: Easy server management through their well-documented API.

Cons:

- Inconsistent Performance: Due to third-party hosts, performance can vary.

- Resource Availability Issues: Stopping a server means you may not get the same resources when restarting.

- Not Beginner-Friendly: The platform is more suited for experienced users.

- Potential Privacy Concerns: The marketplace model using third-party hosts might not suit privacy-conscious users.

TensorDock Deep Dive: What You Really Need to Know

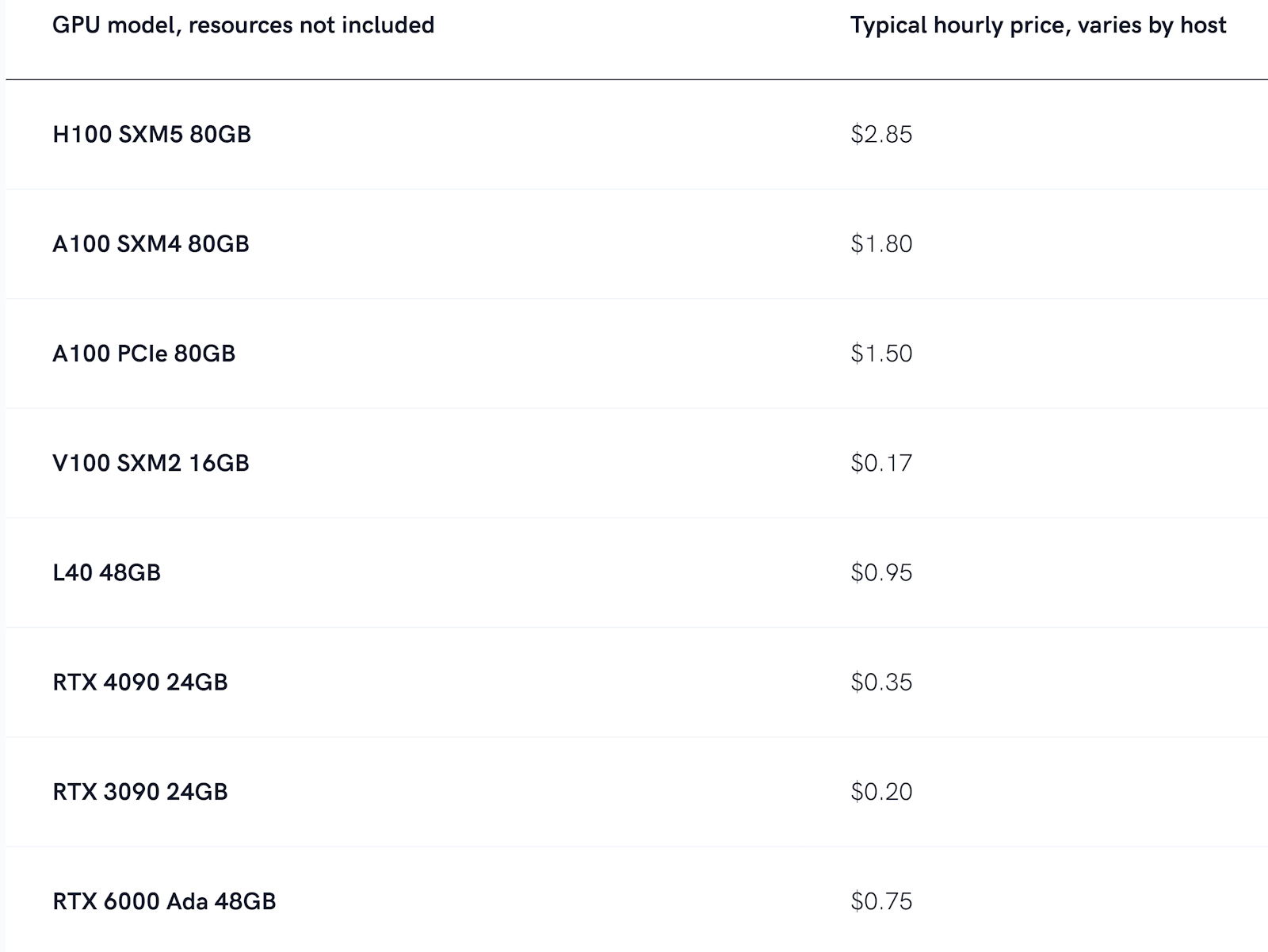

GPU Models and Pricing

When it comes to GPU selection, TensorDock doesn’t hold back. They offer a range of GPUs catering to both consumer-level and enterprise-level users, which sets them apart from many other services. Let’s break down the pricing of some of their most popular models.

- H100 SXM5: $2.80/hour

This is TensorDock’s top-tier option, designed for high-performance AI training and inference. If you’re working on deep learning or large-scale AI projects, this is the beast you want.

- A100 SXM4: $1.80/hour

The A100 strikes a nice balance between performance and cost, making it ideal for AI inference and other computationally intensive tasks.

- RTX 4090: $0.35/hour

A consumer GPU that’s a steal for tasks like image processing, rendering, or even cloud gaming. It’s not as powerful as the H100, but for the right tasks it offers excellent value for money.

The flexibility in pricing is one of TensorDock’s strongest points. Because you choose exactly what you need, you won’t get stuck overpaying for resources you don’t use. Plus, long-term users can unlock even better deals by committing to longer-term contracts.
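
To see what these hourly rates mean for an actual job, the minimal sketch below multiplies a rate by the expected runtime. It uses only the on-demand GPU prices quoted above, which may have changed since this review. It ignores the separately billed CPU, RAM, and storage, and it assumes multi-GPU cost scales linearly. The `job_cost` helper is illustrative and is not part of any TensorDock tool.

```python
# On-demand GPU rates quoted in this review (USD/hour); check current prices.
GPU_RATES = {
    "H100 SXM5": 2.80,
    "A100 SXM4": 1.80,
    "RTX 4090": 0.35,
}

def job_cost(gpu: str, hours: float, num_gpus: int = 1) -> float:
    """Estimated GPU-only cost of a job; CPU, RAM, and storage are billed separately.

    Assumes the cost of several GPUs is simply the single-GPU rate times the count.
    """
    if hours < 0 or num_gpus < 1:
        raise ValueError("hours must be >= 0 and num_gpus must be >= 1")
    return round(GPU_RATES[gpu] * hours * num_gpus, 2)

if __name__ == "__main__":
    for gpu in GPU_RATES:
        print(f"{gpu}: 100 hours = ${job_cost(gpu, 100):.2f}")
```

A 100-hour run works out to $280 on an H100, $180 on an A100, and $35 on an RTX 4090. That comparison does not account for the bigger cards finishing the same job in fewer hours.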

Infrastructure and Network

TensorDock’s infrastructure is global and scalable, which makes it well suited to GPU-heavy projects. They have access to 30,000 GPUs worldwide, with hundreds available to deploy across 100+ locations in 20+ countries.

So whether you’re based in Europe, North America, or Asia, you should be able to find a low-latency GPU server nearby. For projects that need distributed computing or low latency for real-time applications such as cloud gaming or machine learning workloads, this is a big plus.

Their infrastructure is housed in Tier 3 and Tier 4 data centers, which are known for high security, reliability, and uptime guarantees. These data centers are designed to handle large-scale operations with minimal downtime, so your workloads should run smoothly. TensorDock’s advertised 99.99% uptime relies largely on this infrastructure. But there’s a key detail to be aware of: TensorDock doesn’t own all the hardware.

Instead, TensorDock operates a marketplace model in which third-party hosts rent out their GPUs through the platform. This model is what allows TensorDock to compete on price, but it also introduces some challenges.

Since the hardware is managed by third-party hosts, there’s a risk of performance variability. Not every host maintains its hardware to the same standard, and that inconsistency can affect the user experience. Keep this in mind, especially if consistent high-performance computing is important for your projects.

Performance and Inconsistencies

When it comes to performance, TensorDock makes bold promises, including 99.99% uptime and the use of vetted hardware. On paper, this sounds great, but the reality is more complicated because of the marketplace model, where third-party hosts manage the GPU nodes. While this model allows for lower pricing, it also introduces potential performance inconsistencies.
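
To put the 99.99% figure in perspective, the arithmetic below converts an uptime percentage into the maximum downtime it allows over a given period. It’s a back-of-the-envelope sketch. This review doesn’t say whether TensorDock’s figure is a contractual SLA or how it’s measured across hosts.

```python
MINUTES_PER_DAY = 24 * 60

def downtime_budget_minutes(uptime_pct: float, days: float) -> float:
    """Maximum downtime (in minutes) permitted by an uptime percentage over `days`."""
    if not 0 <= uptime_pct <= 100:
        raise ValueError("uptime_pct must be between 0 and 100")
    return (1 - uptime_pct / 100) * days * MINUTES_PER_DAY

if __name__ == "__main__":
    print(f"per 30-day month: {downtime_budget_minutes(99.99, 30):.2f} min")
    print(f"per 365-day year: {downtime_budget_minutes(99.99, 365):.2f} min")
```

At 99.99%, that’s roughly 4.3 minutes of downtime per 30-day month, or about 52.6 minutes per year.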

The main issue is that not all third-party hosts are equal in hardware quality and maintenance standards. Some users have reported that the performance of the same dedicated GPU model can vary significantly depending on which host they’re connected to.

For example, an RTX 4090 from one host may perform differently from the same model on another host because of factors like server load, underlying infrastructure, or management practices. This variability can be frustrating, particularly for users running time-sensitive or resource-intensive tasks such as AI model training, deep learning, or large-scale rendering.

Another challenge comes when you stop a server. TensorDock doesn’t guarantee that the same GPU resources will be available when you restart it. This means that even if you had a top-performing setup during one session, you might not get the same hardware, or even the same level of performance, next time.

While this may not be a huge issue for short-term workloads or one-off tasks, it becomes more problematic for long-term projects that need consistent performance over time. If you’re working on something that requires sustained, predictable power, such as long-running AI training or continuous rendering, this could slow your project down or introduce performance bottlenecks.
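
Because a restarted server may land on different hardware, it’s worth confirming what you actually got before you launch a long job. The sketch below assumes the instance has NVIDIA drivers and `nvidia-smi` installed, and it queries the GPU name, memory, and driver version. The helper names and the expected-model check are illustrative.

```python
import shutil
import subprocess

# nvidia-smi's CSV query mode; the fields chosen here are just an example.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,driver_version",
    "--format=csv,noheader",
]

def parse_gpu_csv(text: str) -> list[dict[str, str]]:
    """Turn nvidia-smi's CSV rows (one per GPU) into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        name, memory, driver = (field.strip() for field in line.split(","))
        gpus.append({"name": name, "memory": memory, "driver": driver})
    return gpus

def current_gpus() -> list[dict[str, str]]:
    """Query the GPUs visible on this machine (requires NVIDIA drivers)."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found; are the NVIDIA drivers installed?")
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)

def matches_expected(gpus: list[dict[str, str]], expected: str) -> bool:
    """True only if at least one GPU is present and every GPU name contains `expected`."""
    return bool(gpus) and all(expected in gpu["name"] for gpu in gpus)
```

On the server, `matches_expected(current_gpus(), "RTX 4090")` returns `False` if the host handed you a different card. That gives you a cheap gate to put in front of a long training or rendering run.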

For users who need rock-solid performance consistency, these fluctuations can be a major drawback. While the platform does its best to vet hosts and remove underperforming ones, the marketplace model means performance variability remains a reality you need to factor in when choosing TensorDock.
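
One practical way to live with this variability is to record a baseline from your own workload, such as training iterations per second, on a host you were happy with. You can then compare every new or restarted host against that baseline. The sketch below handles only the comparison step. The 10% tolerance and the sample numbers are hypothetical, and the benchmark itself is whatever you already run.

```python
import statistics

def relative_throughput(samples: list[float], baseline: float) -> float:
    """Median of the new host's measurements as a fraction of your recorded baseline."""
    if not samples or baseline <= 0:
        raise ValueError("need at least one sample and a positive baseline")
    return statistics.median(samples) / baseline

def is_underperforming(samples: list[float], baseline: float, tolerance: float = 0.10) -> bool:
    """Flag a host whose median throughput falls more than `tolerance` below baseline."""
    return relative_throughput(samples, baseline) < 1 - tolerance

if __name__ == "__main__":
    baseline = 100.0  # hypothetical: iterations/sec recorded on a host you were happy with
    new_host = [82.0, 85.0, 84.0]  # hypothetical: three runs on a freshly restarted server
    print(f"{relative_throughput(new_host, baseline):.0%} of baseline")  # 84% of baseline
    print("underperforming:", is_underperforming(new_host, baseline))  # True
```

Using the median of several runs instead of a single measurement prevents one noisy run from condemning, or excusing, a host.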

Customizability and Billing

TensorDock’s à la carte pricing model is a breath of fresh air, especially if you’re tired of the pre-configured templates offered by other providers. You can choose exactly how much CPU, RAM, and storage you need, and you’re billed only for what you use. This is a huge plus if you’re working on multiple projects with different resource requirements.

For example, you can get:

- 1 vCPU for $0.003/hour

- 1GB RAM for $0.002/hour

- SSD NVMe storage for as little as $0.00005/hour.

These prices are fantastic for users who need flexibility and don’t want to pay for pre-configured setups that force them to overcommit.
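
To show how these per-unit rates add up, the sketch below totals a custom configuration. The rates are the ones quoted above and may have changed. The review doesn’t state the storage unit, so the code assumes the storage price is per GB per hour. The example configuration is hypothetical.

```python
# Per-unit hourly rates quoted in this review (USD). Prices may have changed.
VCPU_RATE = 0.003          # per vCPU per hour
RAM_GB_RATE = 0.002        # per GB of RAM per hour
STORAGE_GB_RATE = 0.00005  # ASSUMPTION: per GB of NVMe storage per hour

def hourly_cost(gpu_rate: float, vcpus: int, ram_gb: int, storage_gb: int) -> float:
    """Sum the GPU rate and the a la carte CPU, RAM, and storage charges."""
    return (gpu_rate
            + vcpus * VCPU_RATE
            + ram_gb * RAM_GB_RATE
            + storage_gb * STORAGE_GB_RATE)

if __name__ == "__main__":
    # Hypothetical configuration: one RTX 4090 ($0.35/hour in this review),
    # 8 vCPUs, 32 GB RAM, 100 GB NVMe storage.
    cost = hourly_cost(0.35, vcpus=8, ram_gb=32, storage_gb=100)
    print(f"${cost:.3f}/hour, about ${cost * 24 * 30:.2f} per 30-day month")
```

For one RTX 4090 with 8 vCPUs, 32 GB of RAM, and 100 GB of storage, this comes to about $0.443/hour, or roughly $319 for a 30-day month of continuous use.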

Ease of Use

Ease of use is a mixed bag with TensorDock. While the platform shines for experienced users, especially sysadmins or ML developers familiar with cloud infrastructure, it can feel daunting for those new to virtual machines and GPU servers. TensorDock advertises the ability to deploy a server in just 30 seconds, which is technically true. You can spin up spot instances quickly, but the overall experience isn’t as intuitive as it could be for beginners.

The platform offers advanced features such as preinstalled Docker and KVM virtualization, which give experienced users more control and flexibility. That’s fantastic if you know what you’re doing and want to customize the environment to suit specific needs, but these features can be overwhelming if you’re just starting out. Without a strong technical background, navigating the dashboard or configuring these settings could be confusing.

Compared with cloud providers that offer pre-configured environments or beginner-friendly interfaces, TensorDock could improve by adding more step-by-step guides or simplified options for less experienced users. If you’re a beginner, expect a learning curve, but once you get the hang of it, you’ll appreciate the customizability and power the platform offers.

Customer Support

Customer support is where TensorDock shows both strengths and limitations, depending on what you need. They offer basic support, but it’s not as comprehensive as that of enterprise-focused competitors such as Lambda Labs or the large cloud providers. If you’re running mission-critical applications that require around-the-clock attention or rapid response times, you may find TensorDock’s support lacking. They don’t offer the kind of hands-on 24/7 service that some businesses expect, especially those used to enterprise-grade providers.

However, for smaller projects or users who don’t need constant assistance, TensorDock’s support can be perfectly adequate. They provide email-based support, and response times are generally reasonable for non-urgent issues. Because the platform is designed with experienced users in mind, many won’t need much support in the first place.

One area where TensorDock stands out is its API documentation, which is thorough and well organized. This lets advanced users manage and automate server deployments with minimal help, reducing the need for support interactions. While it’s not ideal for those who want high-touch customer service, TensorDock’s setup works well for users who are self-sufficient and comfortable troubleshooting on their own.

Privacy and Security Concerns

Privacy and security are notable considerations when using TensorDock, especially because of its marketplace model, in which it resells GPU resources from third-party providers.

While they ensure that most of their own servers are housed in Tier 3 and Tier 4 data centers, known for their high security and robust infrastructure, the nature of relying on external hosts introduces potential risks. These data centers are designed to meet high uptime and security standards, but the fact that you don’t always know who is managing the hardware can raise privacy flags.

If you’re handling sensitive data or working on projects that must comply with strict regulations such as GDPR or HIPAA, carefully evaluate whether TensorDock’s model fits your compliance requirements. Its use of third-party infrastructure adds another layer of trust.

You’re trusting not only TensorDock to provide a secure platform but also the third-party hosts who manage the actual servers. This can be uncomfortable for users with mission-critical workloads or those who require complete control over their data environment.

While TensorDock does enforce standards on these hosts, such as vetted hardware and minimum performance expectations, this multi-layered arrangement might not suit users who prioritize privacy and end-to-end security control.

Global Reach and Availability

Global reach and availability are among TensorDock’s biggest strengths. With over 100 locations in more than 20 countries, you can usually get a GPU server near you, which reduces latency and improves performance.

This is especially useful for low-latency tasks like cloud gaming, real-time AI, and video streaming. With servers all over the world, you can choose locations near your audience or project needs and have a smoother and more efficient user experience.

The global reach also gives businesses and developers with international operations more flexibility. You can scale your projects across different regions with less worry about server availability or latency. Whether you’re in North America, Europe, Asia, or beyond, there’s likely a GPU server near you that can meet your needs. This kind of distributed infrastructure is crucial for projects that require real-time data processing or handle workloads across different time zones and markets.

For those working on global projects or serving international customers, deploying servers near end users improves performance, reduces delays, and helps maintain high availability, making TensorDock a great option for businesses that need global scalability.
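
If you’re choosing between locations, one quick comparison is to time a TCP handshake to a server in each one. The sketch below assumes you already have reachable hosts to test, such as small instances you launched in each candidate region. The labels, hosts, and ports you pass in are your own placeholders. A TCP connect time is only a rough proxy for the latency your application will see.

```python
import socket
import time

def connect_latency_ms(host: str, port: int, timeout: float = 3.0) -> float:
    """Time one TCP handshake to host:port in milliseconds (includes DNS lookup for hostnames)."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000

def fastest(candidates: dict[str, tuple[str, int]], attempts: int = 3) -> tuple[str, float]:
    """Return the label and best-of-N connect time of the quickest reachable candidate."""
    results: dict[str, float] = {}
    for label, (host, port) in candidates.items():
        try:
            results[label] = min(connect_latency_ms(host, port) for _ in range(attempts))
        except OSError:
            continue  # unreachable, refused, or timed-out candidates are skipped
    if not results:
        raise RuntimeError("no candidate was reachable")
    best = min(results, key=results.__getitem__)
    return best, results[best]
```

Taking the best of several attempts filters out one-off network hiccups. Passing IP addresses instead of hostnames keeps DNS lookup time out of the measurement.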

Value for Money

Value for money is where TensorDock really shines in the cloud GPU market. Its pricing is far lower than that of big clouds like AWS, Google Cloud, or Azure, making it a great option for GPU-heavy workloads such as AI model training, rendering, or cloud gaming. For example, you can rent a high-end RTX 4090 for $0.35/hour or a V100 for $0.17/hour. Unlike some big clouds, though, TensorDock doesn’t offer free credits.

These prices can be a game changer for developers, researchers, or startups who need high-end performance but can’t afford the sky-high prices of established clouds. Whether you’re training an AI model, rendering complex graphics, or running GPU-intensive simulations, TensorDock offers that hardware at a fraction of the cost.

TensorDock’s pay-per-use model also means you pay only for what you use. There are no hidden fees, and the transparent pricing means you won’t get hit with unexpected charges for data ingress/egress or unused resources. For sustained GPU access, long-term commitments can bring further savings. Overall, TensorDock delivers great value for anyone looking for affordable, powerful cloud GPUs.
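
Whether a long-term commitment actually saves money depends on how much of the committed time you use. The sketch below assumes a commitment is billed for every committed hour at a discounted rate, whether or not you use those hours. The 20% discount is a made-up placeholder, since this review doesn’t list TensorDock’s actual commitment discounts.

```python
def on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay-per-use: billed only for the hours you actually run."""
    return hourly_rate * hours_used

def committed_cost(hourly_rate: float, discount: float, hours_committed: float) -> float:
    """ASSUMPTION: a commitment is billed for every committed hour at a discounted rate."""
    return hourly_rate * (1 - discount) * hours_committed

def break_even_hours(discount: float, hours_committed: float) -> float:
    """Usage above which the commitment beats on-demand: (1 - discount) * committed hours."""
    return (1 - discount) * hours_committed

if __name__ == "__main__":
    rate = 0.35      # RTX 4090 on-demand rate quoted in this review
    discount = 0.20  # hypothetical commitment discount
    term = 720       # a 30-day commitment, in hours
    print(f"break-even: {break_even_hours(discount, term):.0f} of {term} hours")
    for used in (500, 650):
        print(f"{used} h used: on-demand ${on_demand_cost(rate, used):.2f}, "
              f"committed ${committed_cost(rate, discount, term):.2f}")
```

Under these assumptions, a commitment only pays off if you would use more than (1 minus the discount) of the committed hours. With a 20% discount, that threshold is 576 of 720 hours.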

Final Verdict: Is TensorDock Worth It?

So, is TensorDock worth it? It depends. If you’re looking for affordable, flexible GPU power and you’re comfortable with some performance variability, then yes, TensorDock offers incredible value. However, if you’re a beginner or need enterprise-level performance with guaranteed consistency, weigh your options carefully. The pricing, customizability, and global reach are standout features, but the inconsistent performance and privacy concerns may hold you back from fully committing.

In the end, TensorDock is an impressive cloud GPU provider for the right kind of user. Just make sure you’re that user before diving in.