RunPod vs Lambda Labs: Infrastructure, Pricing, and Security Compared

If you’re deep into AI workloads, you’ve probably heard of RunPod and Lambda Labs. These two GPU cloud services promise seamless performance for machine learning models, but which one truly delivers? That’s exactly what we’re breaking down in this RunPod vs Lambda Labs comparison. Whether you’re after lightning-fast deployments or need to train massive models for days on end, both platforms offer powerful tools to get the job done.

With RunPod, you’re looking at instant scalability, minimal operational overhead, and some impressive serverless features like sub-250ms cold starts. Lambda Labs, on the other hand, stands out with its 1-click clusters and on-demand access to cutting-edge NVIDIA GPUs. Both are strong contenders, but which one fits your workflow? Stick around as we dig into what each service brings to the table to help you decide which one better fits your machine learning and AI needs.

Affiliate Disclosure

We prioritize transparency with our readers. If you purchase through our affiliate links, we may earn a commission at no additional cost to you. These commissions enable us to provide independent, high-quality content to our readers. We only recommend products and services that we personally use or have thoroughly researched and believe will add value to our audience.

RunPod vs Lambda Labs: An Overview

Lambda Labs

Lambda Labs is known for offering a diverse array of GPU instances designed for deep learning, machine learning, and AI research. Thanks to its robust infrastructure, Lambda Labs is popular among data scientists and engineers who need to run compute-intensive workloads on demand. Competitive pricing and cloud-based virtual machines make Lambda an attractive choice for both startups and established enterprises. Lambda GPU Cloud is highly optimized for performance, providing scalable infrastructure for businesses handling large data analytics workloads and tasks such as automatic speech recognition.

RunPod

RunPod differentiates itself with a flexible, user-friendly interface that lets users deploy and manage their workloads seamlessly. Built with cloud transformation in mind, RunPod is popular among startups and academic institutions for its easy deployment and robust feature set. Its autoscaling architecture supports a wide array of GPU models for compute-intensive workloads, from basic tasks to sophisticated AI models. The service also integrates well with Google Cloud and Amazon Web Services (AWS). Above all, it lets users run code in the cloud almost as easily as they would locally.

Searching for flexible GPU cloud solutions? Explore CUDO Compute for scalable options tailored to meet your AI and HPC needs.

Key Similarities

- Both services support a wide range of GPU models, including NVIDIA’s A100 and H100.

- Each offers data security and compliance features for sensitive machine learning workloads.

- They both provide cloud-based virtual machines for training, inference, and data analytics.

- Each platform provides scalable solutions for large-scale cloud transformation.

GPU Models and Pricing Comparison

The choice of GPU is one of the most important factors when selecting a cloud provider. Both RunPod and Lambda Labs offer a variety of GPU models optimized for different kinds of workloads, from AI and machine learning to more general compute-intensive workloads.

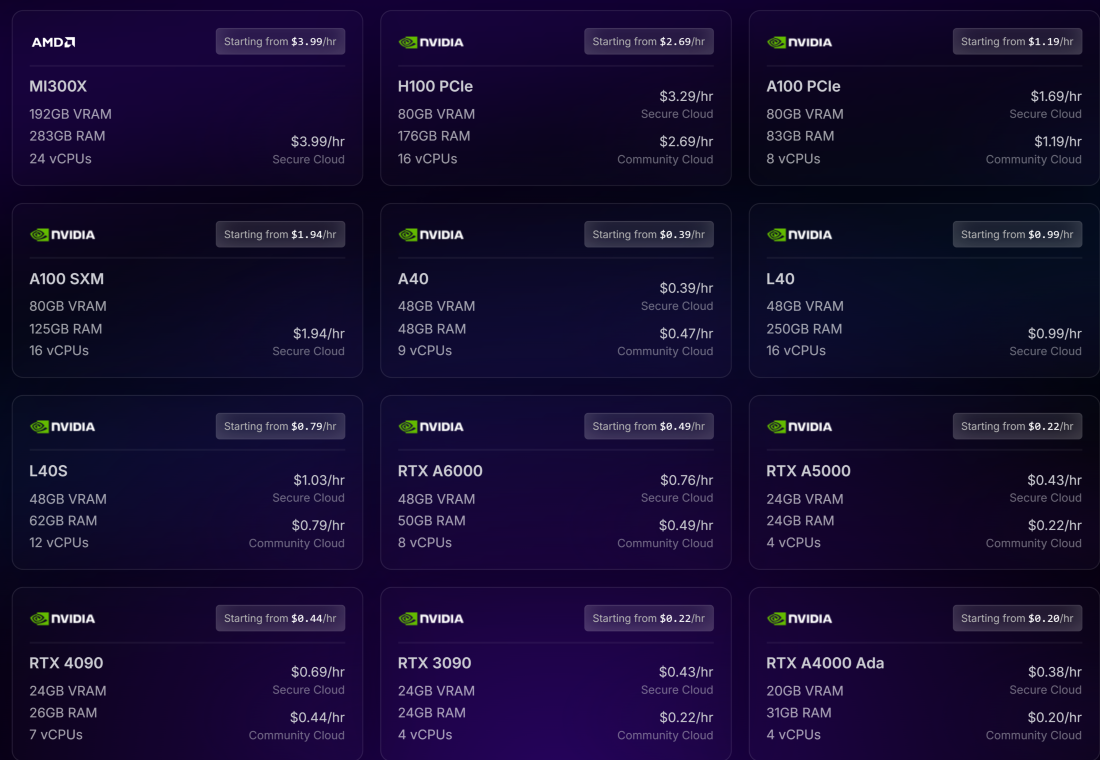

RunPod GPU Models and Pricing

RunPod offers a wide range of GPUs suitable for deep learning and data analytics workloads, with pricing options that cater to different budgets and project sizes.

- NVIDIA H100 PCIe: Starting at $2.69/hour for 80GB VRAM, 176GB RAM, and 16 vCPUs. Its large memory capacity makes it a strong option for data analytics and automatic speech recognition tasks.

- NVIDIA A100 PCIe: Starting at $1.19/hour for 80GB VRAM, 83GB RAM, and 8 vCPUs. This model is great for handling high-performance deep-learning models.

- NVIDIA A40: Starting at $0.39/hour for 48GB VRAM, 50GB RAM, and 9 vCPUs, making it a cost-effective option for smaller-scale AI projects.

- AMD MI300X: Starting at $3.99/hour for 192GB VRAM, 283GB RAM, and 24 vCPUs, ideal for large-scale compute-intensive workloads.

These models are available across RunPod’s global data centers, allowing users to spin up cloud-based virtual machines quickly and at scale.
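To make the list above easier to compare, here is a minimal Python sketch that estimates the cost of a job and ranks the GPUs by price per GB of VRAM. It assumes the starting hourly rates quoted above; the `GpuOffer` class and both helper functions are hypothetical illustrations, not part of any RunPod SDK, and real rates vary by tier and availability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOffer:
    """One GPU option with its advertised starting price (hypothetical helper type)."""
    name: str
    usd_per_hour: float
    vram_gb: int


# Starting prices as quoted in this article; actual rates may differ.
RUNPOD_OFFERS = [
    GpuOffer("NVIDIA H100 PCIe", 2.69, 80),
    GpuOffer("NVIDIA A100 PCIe", 1.19, 80),
    GpuOffer("NVIDIA A40", 0.39, 48),
    GpuOffer("AMD MI300X", 3.99, 192),
]


def job_cost(offer: GpuOffer, hours: float, gpus: int = 1) -> float:
    """Estimated cost in USD of running `gpus` GPUs for `hours` hours."""
    return round(offer.usd_per_hour * hours * gpus, 2)


def rank_by_vram_price(offers: list[GpuOffer]) -> list[GpuOffer]:
    """Sort offers from cheapest to most expensive per GB of VRAM per hour."""
    return sorted(offers, key=lambda o: o.usd_per_hour / o.vram_gb)


if __name__ == "__main__":
    a100 = RUNPOD_OFFERS[1]
    print(f"24 h on one {a100.name}: ${job_cost(a100, 24):.2f}")
    for offer in rank_by_vram_price(RUNPOD_OFFERS):
        print(f"{offer.name}: ${offer.usd_per_hour / offer.vram_gb:.4f} per GB-hour")
```

Price per GB of VRAM is a deliberately crude metric: it ignores compute throughput and interconnect, so treat it as a first filter rather than a verdict.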

Pricing Flexibility

RunPod offers two pricing tiers: Secure Cloud and Community Cloud. Secure Cloud is tailored to users with strict security requirements, while Community Cloud offers lower prices. This makes RunPod a highly flexible option for both startups and enterprises looking for cost-efficient GPU usage.

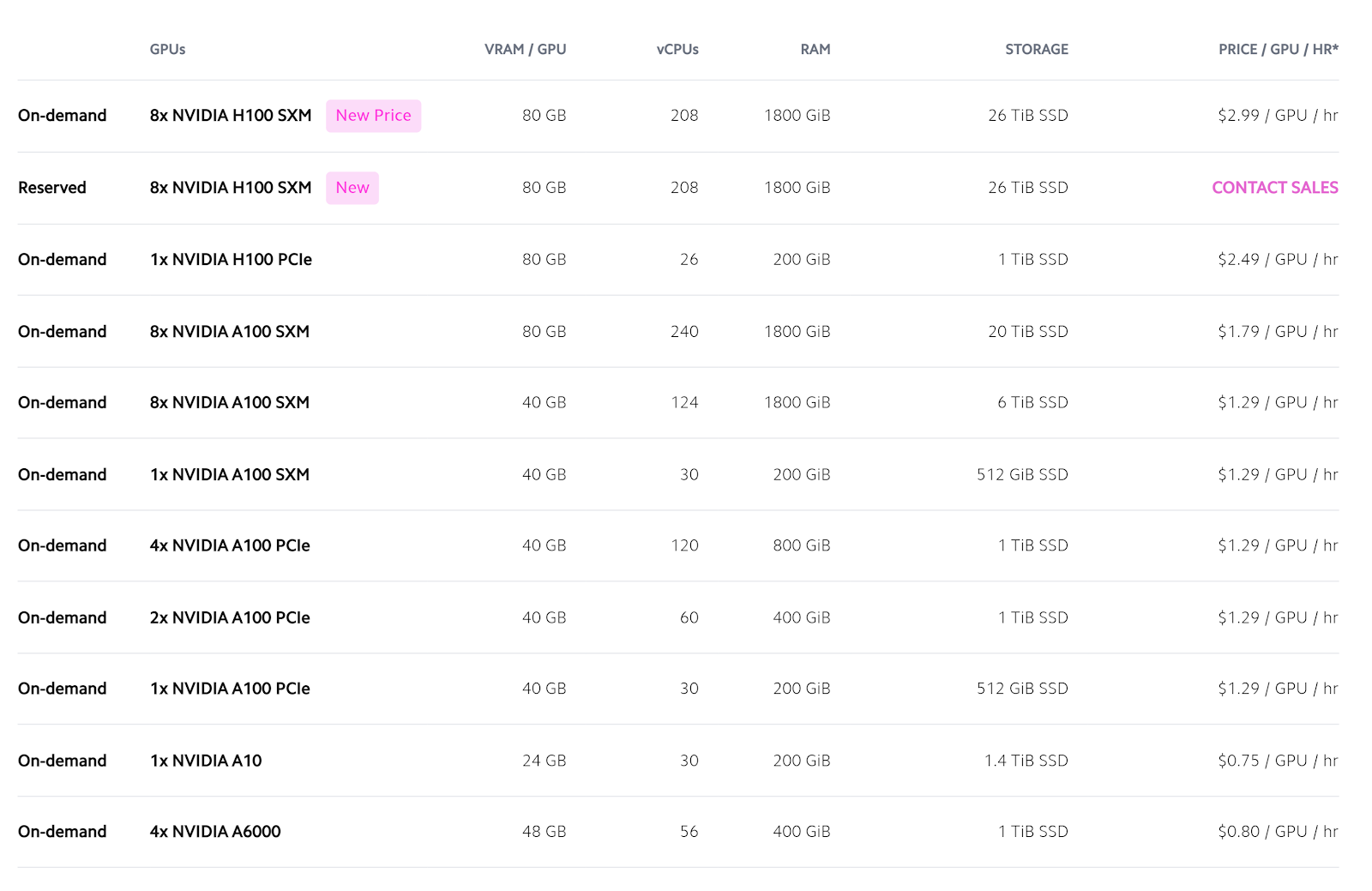

Lambda Labs GPU Models and Pricing

Lambda Labs focuses heavily on high-performance GPUs designed for AI research, deep learning, and machine learning.

- NVIDIA H100: Lambda Labs offers this model at competitive pricing. It’s suitable for compute-intensive workloads such as automatic speech recognition and interactive voice response systems.

- NVIDIA A100: Available for high-intensity workloads such as transcribing speech with human-level accuracy. Lambda’s pricing is somewhat higher than RunPod’s $1.19/hour starting rate, at approximately $1.50 to $2.00/hour depending on the configuration.

- NVIDIA RTX A6000: With 48GB VRAM and robust performance, this GPU is a cost-effective solution for data analytics and more computationally demanding tasks.

- AMD MI100: A powerful choice for neural network research and data matching tasks, priced at around $2.50/hour.

Cost Efficiency and Model Versatility

When comparing Lambda GPU Cloud with RunPod, Lambda is generally more focused on research-specific workloads. RunPod offers more variety in GPU models for general-purpose computing, while Lambda Labs excels at providing highly optimized environments for data scientists and researchers who need scalable infrastructure.
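Using only the A100 figures quoted in this article (RunPod from $1.19/hour, Lambda at roughly $1.50 to $2.00/hour), a short sketch can turn those rates into a cost range for a given number of GPU-hours. The `PriceRange` type and `compare` helper are hypothetical, and the rates are the article’s approximations, not live quotes.

```python
from typing import NamedTuple


class PriceRange(NamedTuple):
    """Price range in USD; low == high when only a single rate is quoted."""
    low: float
    high: float


# A100 hourly rates as quoted above (approximate, configuration-dependent).
A100_RATES = {
    "RunPod": PriceRange(1.19, 1.19),
    "Lambda Labs": PriceRange(1.50, 2.00),
}


def cost_range(rate: PriceRange, gpu_hours: float) -> PriceRange:
    """Total cost range in USD for the given number of GPU-hours."""
    return PriceRange(round(rate.low * gpu_hours, 2), round(rate.high * gpu_hours, 2))


def compare(rates: dict[str, PriceRange], gpu_hours: float) -> dict[str, PriceRange]:
    """Cost range per provider for the same amount of GPU time."""
    return {provider: cost_range(rate, gpu_hours) for provider, rate in rates.items()}


if __name__ == "__main__":
    for provider, (low, high) in compare(A100_RATES, 100).items():
        print(f"{provider}: ${low:,.2f} to ${high:,.2f} for 100 A100-hours")
```

At these quoted rates, 100 A100-hours come to about $119 on RunPod versus $150 to $200 on Lambda Labs; the gap can shift with configuration, tier, and availability.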

Need powerful GPUs with transparent pricing? Discover CUDO Compute’s solutions to efficiently optimize your workloads.

RunPod vs Lambda Labs: Architecture and Ease of Use

RunPod’s Flexible Deployment Model

One of the key selling points of RunPod is its user-friendly interface that allows for flexible deployment. Users can spin up GPU pods in seconds with cold-start times reduced to milliseconds, making it a go-to option for those who need immediate access to computational power.

- Auto-scaling Capabilities: RunPod’s autoscaling infrastructure lets users deploy anywhere from 1 to 100 GPUs based on workload demands. This is a significant advantage for those running large models where demand may fluctuate. Additionally, it integrates with Google Cloud and AWS, making it a practical fit for teams already using those platforms, for example alongside Google Cloud’s Vertex AI data labeling.

- Customizable Environments: RunPod supports both pre-configured environments and custom containers, offering templates for popular AI tools like PyTorch and TensorFlow. This makes it easier to start a project with minimal setup time, especially for academic institutions or startups.

- Network Performance: With 10PB+ of network storage and low-latency connections, RunPod is optimized for large datasets, making it suitable for data matching and other compute-intensive workloads. The company also advertises 99.99% uptime, which is critical for enterprise-level applications.
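To put the advertised 99.99% uptime figure in perspective, it can be converted into the downtime it permits. Assuming a 365-day year and a 30-day month, the arithmetic works out as follows:

```latex
\begin{aligned}
\text{downtime}_{\text{year}}  &= (1 - 0.9999) \times 365 \times 24 \times 60\ \text{min} = 52.56\ \text{min} \\
\text{downtime}_{\text{month}} &= (1 - 0.9999) \times 30 \times 24 \times 60\ \text{min} = 4.32\ \text{min}
\end{aligned}
```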

Lambda’s Streamlined Research-Oriented Architecture

Lambda Labs focuses on providing highly optimized environments for AI research. Their infrastructure is designed specifically to handle AI and ML workloads, with features like automatic cluster orchestration and data center-level support. Lambda also offers bare-metal instances for users who need direct access to hardware without the overhead of virtualization.

- Pre-installed Machine Learning Tools: Lambda’s infrastructure comes with popular machine learning libraries pre-installed, including PyTorch, TensorFlow, and Keras. This makes it ideal for those who want to focus more on model development and less on infrastructure management.

- Data Center Locations: Lambda Labs also has a global network of data centers, ensuring low latency for a variety of workloads. This helps with compute-intensive workloads such as models that predict global consumer preferences or interactive voice response systems.
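If you want to confirm which frameworks are actually present on a fresh instance before starting work, a quick check with Python’s standard `importlib` is enough. This is a generic sketch rather than a Lambda-provided tool; it assumes `torch`, `tensorflow`, and `keras` are the import names for PyTorch, TensorFlow, and Keras, which is the usual convention.

```python
import importlib.util

# Usual top-level import names for the frameworks mentioned above.
FRAMEWORKS = ("torch", "tensorflow", "keras")


def installed_frameworks(names=FRAMEWORKS) -> dict[str, bool]:
    """Report whether each top-level module can be found, without importing it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, present in installed_frameworks().items():
        print(f"{name}: {'installed' if present else 'missing'}")
```

Using `find_spec` instead of a plain `import` keeps the check fast, since heavyweight packages such as TensorFlow are located but not loaded.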

User Interface and Integration

Lambda Labs is slightly more research-focused, with less emphasis on user-friendly interfaces and more on power users who require full control over their computing infrastructure. In contrast, RunPod’s integration with AWS and Google Cloud and its easy-to-use CLI make it more versatile for non-experts and those who need to deploy at scale with minimal hassle.

Supercharge your AI projects with CUDO Compute’s high-performance GPUs and start scaling your applications seamlessly today.

Performance Benchmarks

Lambda Labs Performance

In terms of raw computational power, Lambda Labs excels at handling large-scale AI workloads. Its infrastructure is optimized for AI research, making it suitable for compute-intensive workloads such as complex AI systems and data analytics.

- Model Training: Lambda’s infrastructure is optimized for long training times, with the ability to handle tasks that take up to 7 days. The inclusion of high-performance GPUs like the NVIDIA H100 makes it a strong choice for large language models (LLMs) or other memory-optimized models.

- Data-Intensive Workloads: Lambda also excels at data analytics and data matching, especially for tasks such as transcribing speech with human-level accuracy or predicting global consumer preferences.

RunPod Performance

RunPod’s performance is equally impressive, particularly for users who need quick, scalable infrastructure solutions.

- Cold-Start Times: RunPod offers sub-250ms cold-start times, a significant advantage for applications where instant deployment is critical. This is especially useful for users who need to handle compute-intensive workloads on demand without waiting for their GPUs to warm up.

- Scaling Capabilities: With the ability to autoscale from 0 to 100 GPUs in seconds, RunPod offers flexibility in managing fluctuating workloads. This makes it ideal for tasks that require high scalability, such as cloud transformation or deploying a data-matching tool.
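The scale-from-zero behavior described above can be illustrated with a simple queue-based policy. This is a hypothetical sketch, not RunPod’s actual autoscaler: it assumes each worker handles a fixed number of queued requests and clamps the result between a minimum (0, so an idle endpoint runs no GPUs) and a maximum of 100 GPUs.

```python
import math


def desired_workers(
    queue_depth: int,
    requests_per_worker: int,
    min_workers: int = 0,
    max_workers: int = 100,
) -> int:
    """Number of GPU workers to run for the current queue (hypothetical policy)."""
    if requests_per_worker <= 0:
        raise ValueError("requests_per_worker must be positive")
    if not 0 <= min_workers <= max_workers:
        raise ValueError("need 0 <= min_workers <= max_workers")
    # Enough workers to cover every queued request, rounded up.
    needed = math.ceil(queue_depth / requests_per_worker)
    # Clamp to the configured floor and ceiling.
    return max(min_workers, min(max_workers, needed))


if __name__ == "__main__":
    for depth in (0, 10, 1_000):
        print(depth, "->", desired_workers(depth, requests_per_worker=4))
```

Raising `min_workers` above zero trades idle cost for fewer cold starts, which is the balance any scale-to-zero platform has to strike.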

Use Case Suitability

For applications where flexibility and fast deployment are critical, RunPod has an edge due to its cold-start times and autoscaling capabilities. However, Lambda Labs is better suited for longer, more intense AI workloads that require robust infrastructure over extended periods.

Searching for affordable GPUs? Check out CUDO Compute’s budget-friendly options, built to handle even the most demanding tasks.

Data Security and Compliance

In today’s digital landscape, data security and compliance are more critical than ever, especially when it comes to cloud-based services. Both RunPod and Lambda Labs recognize the importance of safeguarding sensitive data and ensuring that their platforms meet the stringent standards set by regulatory bodies.

They align with widely recognized security and privacy frameworks, including GDPR (General Data Protection Regulation), SOC 2 (System and Organization Controls), and ISO/IEC 27001 (Information Security Management). Adherence to these standards reflects their commitment to protecting user data and maintaining the integrity of the services they offer.

RunPod’s Secure Cloud Architecture

RunPod’s secure cloud architecture is designed with high-level security in mind, making it an excellent choice for industries with sensitive data needs, such as healthcare, finance, and legal services. It offers dedicated instances, meaning users have full control over the virtual machines they utilize, reducing the risks associated with shared environments.

- Encryption at Rest and In Transit: RunPod employs encryption methods to protect data both at rest and during transit, ensuring that data remains confidential and protected from unauthorized access. This means that whether data is stored on their servers or moving between locations, it remains secure.

- Isolated Workloads: RunPod further enhances security by isolating workloads. This feature is especially crucial for organizations that handle personal health information (PHI), financial records, or other confidential materials where compliance with standards like HIPAA (Health Insurance Portability and Accountability Act) and PCI DSS (Payment Card Industry Data Security Standard) is necessary.

- Role-Based Access Control (RBAC): Another layer of security is provided through role-based access control, ensuring that only authorized personnel have access to sensitive information. This allows businesses to implement stringent internal security measures while maintaining operational efficiency.
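Role-based access control boils down to mapping roles to permissions and checking a user’s roles before each action. The sketch below is generic and hypothetical: the role names and permission strings are illustrative assumptions, not RunPod’s actual roles or API.

```python
# Hypothetical role-to-permission mapping for illustration only.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "viewer": frozenset({"pods:read"}),
    "developer": frozenset({"pods:read", "pods:create", "pods:stop"}),
    "admin": frozenset({"pods:read", "pods:create", "pods:stop", "pods:delete", "users:manage"}),
}


def is_allowed(user_roles: set[str], permission: str) -> bool:
    """True if any of the user's roles grants the requested permission."""
    # Unknown roles grant nothing, so access is denied by default.
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in user_roles)


if __name__ == "__main__":
    print(is_allowed({"developer"}, "pods:create"))  # True
    print(is_allowed({"developer"}, "pods:delete"))  # False
    print(is_allowed({"contractor"}, "pods:read"))   # False: unknown role
```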

RunPod’s emphasis on compliance and its comprehensive security architecture make it an ideal platform for enterprises that require stringent security measures to meet regulatory standards and protect sensitive data.

Lambda’s Research-Oriented Security

Lambda Labs focuses on providing secure environments that cater to the needs of research institutions and AI-focused organizations, which often handle proprietary datasets and intellectual property that must remain protected throughout the data lifecycle.

- Data Encryption: Like RunPod, Lambda Labs utilizes encryption at rest and in transit to safeguard user data. Encryption protocols ensure that data cannot be accessed by unauthorized users, making it ideal for companies involved in artificial intelligence research, where the loss or exposure of sensitive training data could compromise intellectual property or competitive advantage.

- Security for Collaborative Environments: Lambda Labs supports secure, collaborative environments where multiple researchers can work on the same project while ensuring data protection. Through secure authentication methods, such as multi-factor authentication (MFA), Lambda ensures that only authorized users can access sensitive research materials.

- Compliance for Academic and Research Projects: Many academic institutions must comply with specific research data regulations. Lambda Labs’ secure infrastructure is compliant with IRB (Institutional Review Board) and NIH (National Institutes of Health) data management policies, making it a trusted partner for universities and research facilities. Whether the data involves clinical trial results, genomic information, or AI model training data, Lambda Labs provides an infrastructure that meets the highest standards of data security.

- Data Segmentation and Controlled Access: Lambda also offers data segmentation, which allows institutions to compartmentalize sensitive data so that access can be tightly controlled. This feature is particularly valuable for large-scale research projects that involve multiple teams, ensuring that only specific groups can access certain parts of the data.
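For readers unfamiliar with how the second factor in MFA typically works, the sketch below implements time-based one-time passwords (TOTP, RFC 6238, built on HOTP from RFC 4226) using only Python’s standard library. It illustrates the general technique; it is not a description of how Lambda Labs implements MFA, and the function names are hypothetical.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password for a given counter (RFC 4226, HMAC-SHA1)."""
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte window
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(key: bytes, timestamp: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP with counter = floor(unix_time / step)."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(key, int(timestamp // step), digits)


def verify(key: bytes, submitted: str, timestamp: Optional[float] = None,
           window: int = 1, step: int = 30, digits: int = 6) -> bool:
    """Accept a code from the current step or up to `window` steps either side (clock drift)."""
    if timestamp is None:
        timestamp = time.time()
    for drift in range(-window, window + 1):
        candidate = totp(key, timestamp + drift * step, step, digits)
        # Constant-time comparison avoids leaking how many characters matched.
        if hmac.compare_digest(candidate, submitted):
            return True
    return False


if __name__ == "__main__":
    shared_key = b"12345678901234567890"  # RFC test key; real keys are random per user
    code = totp(shared_key)
    print(code, verify(shared_key, code))
```

Real deployments also rate-limit attempts and reject reused codes; this sketch omits both for brevity.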

Lambda Labs not only provides powerful cloud GPU solutions but also ensures that the security of proprietary research and sensitive datasets is uncompromised, offering peace of mind to its users in the research and development sectors.

RunPod vs Lambda Labs: Conclusion

In choosing between RunPod and Lambda Labs, the decision largely depends on the specific needs of your project. If you need a user-friendly interface with flexible deployment options and fast cold-start times, RunPod is the better choice. It’s particularly well-suited for users who require scalable infrastructure for on-demand compute-intensive workloads.

On the other hand, Lambda Labs offers a more research-focused environment with powerful GPU options optimized for AI and ML workloads. It’s an excellent choice for long-term projects that require memory-optimized models or data-intensive workloads.

Ultimately, both platforms offer robust cloud GPU services, but their unique features and pricing structures make them better suited for different types of users. RunPod excels in flexibility and scalability, while Lambda Labs is the go-to for those who need high-performance GPUs for specific research applications.

Boost your computing power with CUDO Compute and discover its advanced GPU services.